RAID


Redundant Arrays of Inexpensive Disks, as named by the inventors and commonly referred to as RAID, is a technology that supports the integrated use of two or more hard drives in various configurations to achieve greater performance, reliability through redundancy, and larger disk volume sizes through aggregation. Other expansions of the acronym include "Redundant Arrays of Independent Disks", "Redundant Arrays of Independent Drives", and "Redundant Arrays of Inexpensive Drives". RAID is an umbrella term for computer data storage schemes that divide and replicate data among multiple hard disk drives. Its various designs balance or accentuate two key design goals: increased data reliability and increased I/O (input/output) performance.

A number of standard schemes have evolved which are referred to as levels. There were five RAID levels originally conceived, but many more variations have evolved, notably several nested levels and many non-standard levels (mostly proprietary).

RAID combines physical hard disks into a single logical unit by using either special hardware or software. Hardware solutions often are designed to present themselves to the attached system as a single hard drive, and the operating system is unaware of the technical workings. Software solutions are typically implemented in the operating system, and again would present the RAID drive as a single drive to applications.

There are three key concepts in RAID: mirroring, the copying of data to more than one disk; striping, the splitting of data across more than one disk; and error correction, where redundant data is stored to allow problems to be detected and possibly fixed (known as fault tolerance). Different RAID levels use one or more of these techniques, depending on the system requirements. The main aims of using RAID are to improve reliability, which is important for protecting information that is critical to a business, for example a database of customer orders; or to improve speed, for example in a system that delivers video-on-demand TV programs to many viewers.
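The three concepts can be illustrated with a minimal Python sketch. The function names (`mirror`, `stripe`, `xor_blocks`) and the 4-byte chunk size are assumptions made for illustration only; real implementations work on disk blocks, and XOR parity is only one of several possible redundancy codes.

```python
from functools import reduce


def xor_blocks(blocks):
    """Byte-wise XOR of equally sized blocks (simple parity)."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def mirror(data, copies=2):
    """Mirroring: every disk holds a full copy of the data."""
    return [bytes(data) for _ in range(copies)]


def stripe(data, disks, chunk=4):
    """Striping: deal fixed-size chunks out to the disks in round-robin order."""
    out = [bytearray() for _ in range(disks)]
    for i in range(0, len(data), chunk):
        out[(i // chunk) % disks] += data[i:i + chunk]
    return [bytes(d) for d in out]


data = b"ABCDEFGHIJKLMNOP"
mirrored = mirror(data)                      # two identical copies
striped = stripe(data, disks=2)              # [b"ABCDIJKL", b"EFGHMNOP"]
parity = xor_blocks(striped)                 # redundant data for a third disk
rebuilt = xor_blocks([striped[1], parity])   # recompute disk 0 after it "fails"
```

Because XOR is its own inverse, combining the surviving stripe with the parity block yields the missing stripe, which is the basis of the single-parity levels described below.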

The configuration affects reliability and performance in different ways. The problem with using more disks is that it is more likely that one will go wrong, but by using error checking the total system can be made more reliable by being able to survive and repair the failure. Basic mirroring can speed up reading data as a system can read different data from both the disks, but it may be slow for writing if the configuration requires that both disks must confirm that the data is correctly written. Striping is often used for performance, where it allows sequences of data to be read from multiple disks at the same time. Error checking typically will slow the system down as data needs to be read from several places and compared. The design of RAID systems is therefore a compromise and understanding the requirements of a system is important. Modern disk arrays typically provide the facility to select the appropriate RAID configuration.

RAID systems can be designed to keep working when there is failure - disks can be hot swapped and data recovered automatically while the system keeps running. Other systems have to be shut down while the data is recovered. RAID is often used in high availability systems, where it is important that the system keeps running as much of the time as possible.

RAID is traditionally used on servers, but can be also used on workstations. The latter was once common in storage-intensive applications such as video and audio editing, but has become less advantageous with the advent of large, fast, and inexpensive hard drives based on perpendicular recording technology.

History

Norman Ken Ouchi at IBM was awarded U.S. patent 4,092,732[1], titled "System for recovering data stored in failed memory unit", in 1978. The claims for this patent describe what would later be termed RAID 5 with full stripe writes. The patent also mentions that disk mirroring or duplexing (what would later be termed RAID 1) and protection with dedicated parity (what would later be termed RAID 4) were prior art at that time.

The term RAID was first defined by David A. Patterson, Garth A. Gibson and Randy Katz at the University of California, Berkeley in 1987. They studied the possibility of using two or more drives to appear as a single device to the host system and published a paper: "A Case for Redundant Arrays of Inexpensive Disks (RAID)" in June 1988 at the SIGMOD conference.[2] This specification suggested a number of prototype RAID levels, or combinations of drives. Each had theoretical advantages and disadvantages. Over the years, different implementations of the RAID concept have appeared. Most differ substantially from the original idealized RAID levels, but the numbered names have remained. This can be confusing, since one implementation of RAID 5, for example, can differ substantially from another. RAID 3 and RAID 4 are often confused and even used interchangeably.

Their paper formally defined RAID levels 1 through 5 in sections 7 to 11:

- First level RAID: mirrored drives

- Second level RAID: Hamming code for error correction

- Third level RAID: single check disk per group

- Fourth level RAID: independent reads and writes

- Fifth level RAID: spread data/parity over all drives (no single check disk)

Standard levels

A quick summary of the most commonly used RAID levels:

| Level | Description | Minimum # of disks | Image |

|---|---|---|---|

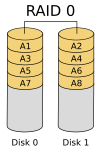

| RAID 0 | Striped set without parity. Provides improved performance and additional storage but no fault tolerance. Any disk failure destroys the array, which becomes more likely with more disks in the array. A single disk failure destroys the entire array because when data is written to a RAID 0 drive, the data is broken into fragments. The number of fragments is dictated by the number of disks in the drive. The fragments are written to their respective disks simultaneously on the same sector. This allows smaller sections of the entire chunk of data to be read off the drive in parallel, giving this type of arrangement huge bandwidth. When one sector on one of the disks fails, however, the corresponding sector on every other disk is rendered useless because part of the data is now corrupted. RAID 0 does not implement error checking so any error is unrecoverable. More disks in the array means higher bandwidth, but greater risk of data loss. | 2 |

|

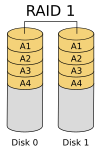

| RAID 1 | Mirrored set without parity. Provides fault tolerance from disk errors and single disk failure. Increased read performance occurs when using a multi-threaded operating system that supports split seeks, very small performance reduction when writing. Array continues to operate so long as at least one drive is functioning. | 2 |

|

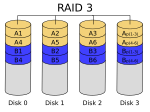

| RAID 3 | Striped set with dedicated parity. This mechanism provides an improved performance and fault tolerance similar to RAID 5, but with a dedicated parity disk rather than rotated parity stripes. The single disk is a bottle-neck for writing since every write requires updating the parity data. One minor benefit is the dedicated parity disk allows the parity drive to fail and operation will continue without parity or performance penalty. | 3 |

|

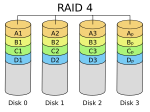

| RAID 4 | Identical to RAID 3 but does block-level striping instead of byte-level striping. | 3 |

|

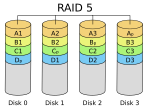

| RAID 5 | Striped set with distributed parity. Distributed parity requires all but one drive to be present to operate; drive failure requires replacement, but the array is not destroyed by a single drive failure. Upon drive failure, any subsequent reads can be calculated from the distributed parity such that the drive failure is masked from the end user. The array will have data loss in the event of a second drive failure and is vulnerable until the data that was on the failed drive is rebuilt onto a replacement drive. | 3 |

|

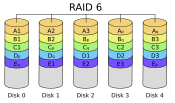

| RAID 6 | Striped set with dual distributed parity. Provides fault tolerance from two drive failures; array continues to operate with up to two failed drives. This makes larger RAID groups more practical, especially for high availability systems. This becomes increasingly important because large-capacity drives lengthen the time needed to recover from the failure of a single drive. Single parity RAID levels are vulnerable to data loss until the failed drive is rebuilt: the larger the drive, the longer the rebuild will take. With dual parity, it gives time to rebuild the array without the data being volatile while the failed drive is being recovered. | 4 |

|
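The capacity and fault-tolerance figures implied by the table can be computed with a short Python sketch. The function name `raid_summary` and its parameters are hypothetical, and the sketch assumes identical drives and the idealized behaviour described above rather than any particular vendor's implementation.

```python
def raid_summary(level, disks, disk_size):
    """Return (usable_capacity, guaranteed_tolerated_failures) for a standard level.

    Assumes `disks` identical drives of `disk_size` each (any unit).
    """
    minimum = {0: 2, 1: 2, 3: 3, 4: 3, 5: 3, 6: 4}
    if level not in minimum:
        raise ValueError(f"unsupported RAID level: {level}")
    if disks < minimum[level]:
        raise ValueError(f"RAID {level} needs at least {minimum[level]} disks")
    if level == 0:
        return disks * disk_size, 0           # all space usable, no redundancy
    if level == 1:
        return disk_size, disks - 1           # every disk is a full copy
    if level in (3, 4, 5):
        return (disks - 1) * disk_size, 1     # one disk's worth of parity
    return (disks - 2) * disk_size, 2         # RAID 6: two disks' worth of parity


for level in (0, 1, 5, 6):
    capacity, tolerated = raid_summary(level, disks=4, disk_size=500)
    print(f"RAID {level}: {capacity} GB usable, survives {tolerated} failure(s)")
```

The second value is the number of failures the array is guaranteed to survive, not the most it could survive under favourable circumstances.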

Nested levels

Many storage controllers allow RAID levels to be nested. That is, one RAID can use another as its basic element, instead of using physical drives. It is instructive to think of these arrays as layered on top of each other, with physical drives at the bottom.

Nested RAIDs are usually signified by joining the numbers indicating the RAID levels into a single number, sometimes with a '+' in between. For example, RAID 10 (or RAID 1+0) conceptually consists of multiple level 1 arrays stored on physical drives with a level 0 array on top, striped over the level 1 arrays. In the case of RAID 0+1, it is most often called RAID 0+1 as opposed to RAID 01 to avoid confusion with RAID 1. However, when the top array is a RAID 0 (such as in RAID 10 and RAID 50), most vendors choose to omit the '+', though RAID 5+0 is more informative.

- RAID 0+1: striped sets in a mirrored set (minimum 4 disks; even number of disks) provides fault tolerance and improved performance but increases complexity. The key difference from RAID 1+0 is that RAID 0+1 creates a second striped set to mirror a primary striped set. The array continues to operate with one or more drives failed in the same mirror set, but if two or more drives fail on different sides of the mirroring, the data on the RAID system is lost.

- RAID 1+0: mirrored sets in a striped set (minimum 4 disks; even number of disks) provides fault tolerance and improved performance but increases complexity. The key difference from RAID 0+1 is that RAID 1+0 creates a striped set from a series of mirrored drives. In a failed disk situation RAID 1+0 performs better because all the remaining disks continue to be used. The array can sustain multiple drive losses as long as no two drives lost comprise a single pair of one mirror.

- RAID 5+0: stripe across distributed parity RAID systems

- RAID 5+1: mirror striped set with distributed parity (some manufacturers label this as RAID 53).
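The difference in failure behaviour between RAID 1+0 and RAID 0+1 can be checked with a small Python sketch. The disk numbering is an assumption made for illustration: in the 1+0 model, disks (0, 1), (2, 3), and so on form the mirror pairs; in the 0+1 model, the first half of the disks forms one striped set and the second half its mirror.

```python
from itertools import combinations


def raid10_survives(failed, disks):
    """RAID 1+0: data is lost only if both disks of some mirror pair fail."""
    failed = set(failed)
    pairs = [(i, i + 1) for i in range(0, disks, 2)]
    return all(not (a in failed and b in failed) for a, b in pairs)


def raid01_survives(failed, disks):
    """RAID 0+1: data survives only if one whole striped set is untouched."""
    failed = set(failed)
    half = disks // 2
    side_a = set(range(half))
    side_b = set(range(half, disks))
    return not (failed & side_a) or not (failed & side_b)


def surviving_double_failures(survives, disks):
    """Count the two-disk failure combinations that the array survives."""
    return sum(survives(pair, disks) for pair in combinations(range(disks), 2))


print(surviving_double_failures(raid10_survives, 4))  # 4 of the 6 combinations
print(surviving_double_failures(raid01_survives, 4))  # 2 of the 6 combinations
```

Under this model, a four-disk RAID 1+0 array survives more of the possible two-disk failures than a four-disk RAID 0+1 array, which matches the descriptions in the list above.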

Non-standard levels

Given the large number of custom configurations available with a RAID array, many companies, organizations, and groups have created their own non-standard configurations, typically designed to meet the needs of a small niche of users. Most of these non-standard RAID levels are proprietary.

Some of the more prominent modifications are:

- CalDigit Inc HDPro adds parity RAID protection (RAID 5 and RAID 6) to subsystems which provides high-speed data transfer rate for 2K film, uncompressed high-definition video, standard-definition video, DVCProHD and HDV editing.

- ATTO Technology's DVRAID adds parity RAID protection to systems which demand performance for 4K film, 2K film, high-definition audio and video.

- Storage Computer Corporation uses RAID 7, which adds caching to RAID 3 and RAID 4 to improve I/O performance.

- EMC Corporation offered RAID S as an alternative to RAID 5 on their Symmetrix systems (which is no longer supported on the latest releases of Enginuity, the Symmetrix's operating system).

- Solaris, OpenSolaris and FreeBSD offer RAID-Z with the ZFS filesystem, which solves RAID 5's write hole problem.

- Network Appliance's Data ONTAP uses RAID-DP (also referred to as "double", "dual" or "diagonal" parity), which is a form of RAID 6, but unlike many RAID 6 implementations, does not use distributed parity as in RAID 5. Instead, two unique parity disks with separate parity calculations are used. This is a modification of RAID 4 with an extra parity disk.

- Accusys Triple Parity (RAID TP) implements three independent parities by extending the formulas of standard RAID 6 algorithms on its FC-SATA and SCSI-SATA RAID controllers so as to tolerate three-disk failure.

Implementations

The distribution of data across multiple drives can be managed either by dedicated hardware or by software. Additionally, there are hybrid RAIDs that are partially software and hardware-based solutions.

Software-based

Software implementations are now provided by many operating systems. A software layer sits above the (generally block-based) disk device drivers and provides an abstraction layer between the logical drives (RAID arrays) and physical drives. Most common levels are RAID 0 (striping across multiple drives for increased space and performance) and RAID 1 (mirroring two drives), followed by RAID 1+0, RAID 0+1, and RAID 5 (data striping with parity).
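The abstraction layer's core job for a striped (RAID 0) volume is address translation from a logical block to a physical drive and offset. A minimal Python sketch of one possible mapping follows; `locate_block` and `stripe_unit` are hypothetical names, and actual operating systems use their own layouts and metadata.

```python
def locate_block(logical_block, disks, stripe_unit=1):
    """Map a logical block number to (disk_index, block_on_disk) for RAID 0.

    `stripe_unit` is the number of consecutive blocks placed on one disk
    before the layout moves on to the next disk.
    """
    chunk_index, offset = divmod(logical_block, stripe_unit)
    disk = chunk_index % disks       # chunks rotate across the disks
    row = chunk_index // disks       # how many chunks precede it on that disk
    return disk, row * stripe_unit + offset


# With two disks and a one-block stripe unit, even blocks land on disk 0
# and odd blocks on disk 1.
layout = [locate_block(n, disks=2) for n in range(4)]
```

Consecutive logical blocks fall on different drives, which is what allows a sequential read to be serviced by several drives at once.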

Since the software must run on a host server attached to storage, the processor on that host must dedicate processing time to run the RAID software. As with hardware-based RAID, if the server experiences a hardware failure, the attached storage could be inaccessible for a period of time.

Software implementations, especially LVM-like, can allow RAID arrays to be created from partitions rather than entire physical drives. For instance, Novell NetWare allows you to divide an odd number of disks into two partitions per disk, mirror partitions across disks and stripe a volume across the mirrored partitions to emulate a RAID 1E configuration. Using partitions in this way also allows mixing reliability levels on the same set of disks. For example, one could have a very robust RAID-1 partition for important files, and a less robust RAID-5 or RAID-0 partition for less important data. (Intel calls this Intel Matrix RAID.) Using two partitions on the same drive in the same RAID array is, however, dangerous. If, for example, a RAID 5 array is composed of four drives 250 + 250 + 250 + 500 GB, with a 500 GB drive split into two 250 GB partitions, a failure of this drive will remove two partitions from the array, causing all of the data held on it to be lost.

Inexpensive controllers that implement RAID in driver software while giving the impression of being hardware RAID controllers are sometimes known as Fake RAID. They do implement genuine RAID; the only faking is that they do it in software.

Hardware-based

Because RAID controllers use proprietary disk layouts, an array typically cannot span controllers from multiple manufacturers. Two advantages over software RAID are that the BIOS can boot from them, and that tighter integration with the device driver may offer better error handling.

A hardware implementation of RAID requires at a minimum a special-purpose RAID controller. On a desktop system, this may be a PCI expansion card, PCI-Express Expansion Card or might be a capability built into the motherboard. Any drives may be used - IDE/ATA, SATA, SCSI, SSA, Fibre Channel, sometimes even a combination thereof. In a large environment the controller and disks may be placed outside of a physical machine, in a stand alone disk enclosure. The using machine can be directly attached to the enclosure in a traditional way, or connected via SAN. The controller hardware handles the management of the drives, and performs any parity calculations required by the chosen RAID level.

Most hardware implementations provide a read/write cache which, depending on the I/O workload, will improve performance. In most systems write cache may be non-volatile (e.g. battery-protected), so pending writes are not lost on a power failure.

Hardware implementations provide guaranteed performance, add no overhead to the local CPU complex and can support many operating systems, as the controller simply presents a logical disk to the operating system.

Hardware implementations also typically support hot swapping, allowing failed drives to be replaced while the system is running.

Inexpensive RAID controllers have become popular that are simply a standard disk controller with a BIOS extension implementing RAID in software for the early part of the boot process. A special operating system driver then takes over the RAID functionality when the system switches into protected mode.

Hot spares

Both hardware and software implementations may support hot spare drives: pre-installed drives that immediately (and automatically) replace a drive that has failed, with the array rebuilt onto the spare. This shortens the mean time to repair, the period during which a second drive failure in the same RAID redundancy group can result in loss of data, though it does not eliminate that window completely; array rebuilds still take time, especially on active systems. It also reduces the risk of data loss when multiple drives fail in a short period of time, as is common when all drives in an array have undergone similar use patterns and experience wear-out failures. This can be especially troublesome when multiple drives in a RAID set are from the same manufacturer batch.

Reliability terms

- Failure rate: The mean time to failure (MTTF) or the mean time between failure (MTBF) of a given RAID is the same as those of its constituent hard drives, regardless of what type of RAID is employed.

- Mean time to data loss (MTTDL): In this context, the average time before a loss of data in a given array.[3] Mean time to data loss of a given RAID should be higher, but can be lower than that of its constituent hard drives, depending upon what type of RAID is employed.

- Mean time to recovery (MTTR): In arrays that include redundancy for reliability, this is the time following a failure to restore an array to its normal failure-tolerant mode of operation. This includes time to replace a failed disk mechanism as well as time to re-build the array (i.e. to replicate data for redundancy).

- Unrecoverable bit error rate (UBE): This is the rate at which a disk drive will be unable to recover data after application of cyclic redundancy check (CRC) codes and multiple retries.

- Write cache reliability: Some RAID systems use RAM write cache to increase performance. Failure of the RAM can lose data.

- Atomic write failure: Also known by various terms such as torn writes, torn pages, incomplete writes, interrupted writes, non-transactional, etc.

Issues with RAID

Correlated failures

The theory behind the error correction in RAID assumes that failures of drives are independent. Given these assumptions it is possible to calculate how often they can fail and to arrange the array to make data loss arbitrarily improbable.
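As an illustration of such a calculation, a commonly used simplified model for a single-parity group of N drives estimates the mean time to data loss as MTTF² / (N × (N − 1) × MTTR). The Python sketch below applies it; the function name and the example figures are assumptions chosen for illustration, and the model relies on independent failures and a repair time much shorter than the MTTF.

```python
def mttdl_single_parity(mttf_hours, mttr_hours, disks):
    """Approximate MTTDL (hours) for one single-parity group.

    Simplified model: data is lost when a second drive fails while the
    first failed drive is still being repaired, and failures are independent.
    """
    if disks < 2:
        raise ValueError("a redundancy group needs at least two drives")
    return mttf_hours ** 2 / (disks * (disks - 1) * mttr_hours)


# Hypothetical figures: drives rated at 1,000,000 hours MTTF, 24-hour repair.
small_group = mttdl_single_parity(1_000_000, 24, disks=4)
large_group = mttdl_single_parity(1_000_000, 24, disks=8)
```

In this model, enlarging the group or lengthening the repair time reduces the estimate, while shortening the repair time (for example with a hot spare) raises it.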

In practice, the drives are often the same age, with similar wear. Since many drive failures are due to mechanical issues, which are more likely on older drives, this violates those assumptions, and failures are in fact statistically correlated. The chance of a second failure before the first has been recovered is therefore not nearly as small as might be supposed, and data loss can occur at significant rates.[4]

Atomicity

This is a little understood and rarely mentioned failure mode for redundant storage systems that do not utilize transactional features. Database researcher Jim Gray wrote "Update in Place is a Poison Apple" during the early days of relational database commercialization. However, this warning largely went unheeded and fell by the wayside upon the advent of RAID, which many software engineers mistook as solving all data storage integrity and reliability problems. Many software programs update a storage object "in-place"; that is, they write a new version of the object on to the same disk addresses as the old version of the object. While the software may also log some delta information elsewhere, it expects the storage to present "atomic write semantics," meaning that the write of the data either occurred in its entirety or did not occur at all.

However, very few storage systems provide support for atomic writes, and even fewer specify their rate of failure in providing this semantic. Note that during the act of writing an object, a RAID storage device will usually be writing all redundant copies of the object in parallel, although overlapped or staggered writes are more common when a single RAID processor is responsible for multiple drives. Hence an error that occurs during the process of writing may leave the redundant copies in different states, and furthermore may leave the copies in neither the old nor the new state. The little known failure mode is that delta logging relies on the original data being either in the old or the new state so as to enable backing out the logical change, yet few storage systems provide an atomic write semantic on a RAID disk.

While the battery-backed write cache may partially solve the problem, it is applicable only to a power failure scenario.
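The failure mode can be reproduced with a toy Python model of a two-way mirror. Everything here is a simplification introduced for illustration: the names `PowerLoss` and `write_mirrored` are hypothetical, the copies are updated one after the other rather than in parallel, and a "sector" is four bytes.

```python
class PowerLoss(Exception):
    """Simulated interruption in the middle of an update."""


def write_mirrored(copies, new_data, sector=4, crash_after=None):
    """Overwrite each copy in place, one sector at a time.

    `crash_after` is the number of sector writes completed before the
    simulated power loss; None means the write runs to completion.
    """
    written = 0
    for copy in copies:
        for start in range(0, len(new_data), sector):
            if crash_after is not None and written == crash_after:
                raise PowerLoss(f"interrupted after {written} sector writes")
            copy[start:start + sector] = new_data[start:start + sector]
            written += 1


old, new = b"OLD-OLD-", b"NEW-NEW-"
copies = [bytearray(old), bytearray(old)]
try:
    write_mirrored(copies, new, crash_after=1)
except PowerLoss:
    pass

# The first copy now mixes new and old sectors; the second is still old.
torn = bytes(copies[0]) not in (old, new)
diverged = copies[0] != copies[1]
```

After the interruption the two copies disagree, and one of them matches neither the old nor the new version of the object, which is the state that delta logging cannot back out of.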

Since transactional support is not universally present in hardware RAID, many operating systems include transactional support to protect against data loss during an interrupted write. Novell NetWare, starting with version 3.x, included a transaction tracking system. Microsoft introduced transaction tracking via the journaling feature in NTFS. The NetApp WAFL file system solves the problem by never updating data in place, as does ZFS.

Unrecoverable data

An unrecoverable bit error can present as a sector read failure. Some RAID implementations protect against this failure mode by remapping the bad sector, using the redundant data to retrieve a good copy of the data, and rewriting that good data to the newly mapped replacement sector. The UBE rate is typically specified at 1 bit in 10^15 for enterprise class disk drives (SCSI, FC, SAS), and 1 bit in 10^14 for desktop class disk drives (IDE, ATA, SATA). Increasing disk capacities and large RAID 5 redundancy groups have led to an increasing inability to successfully rebuild a RAID group after a disk failure, because an unrecoverable sector is found on the remaining drives. Double protection schemes such as RAID 6 attempt to address this issue, but suffer from a very high write penalty.
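A rough sense of the rebuild risk can be obtained from the specified UBE rate. The Python sketch below assumes that bit errors are independent and that a rebuild must read every bit of every surviving drive; the function name and the drive sizes are hypothetical.

```python
import math


def rebuild_error_probability(surviving_disks, disk_bytes, ube_rate):
    """Probability of at least one unrecoverable bit error during a rebuild.

    Computes 1 - (1 - ube_rate) ** bits_read in a numerically stable way.
    """
    bits_read = surviving_disks * disk_bytes * 8
    return -math.expm1(bits_read * math.log1p(-ube_rate))


# Hypothetical RAID 5 group of four 1 TB drives, one of which has failed,
# so three drives must be read in full to rebuild the fourth.
desktop = rebuild_error_probability(3, 10**12, 1e-14)
enterprise = rebuild_error_probability(3, 10**12, 1e-15)
```

Under these assumptions the desktop-class figure comes out at roughly one chance in five of hitting an unrecoverable sector during the rebuild, and the enterprise-class figure at a few percent, which illustrates why larger drives and larger groups make single-parity rebuilds increasingly fragile.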

Write cache reliability

The disk system can acknowledge the write operation as soon as the data is in the cache, not waiting for the data to be physically written. However, any power outage can then mean a significant data loss of any data queued in such cache.

Often a battery is protecting the write cache, mostly solving the problem. If a write fails because of power failure, the controller may complete the pending writes as soon as restarted. This solution still has potential failure cases: the battery may have worn out, the power may be off for too long, the disks could be moved to another controller, the controller itself could fail. Some disk systems provide the capability of testing the battery periodically, which however leaves the system without a fully charged battery for several hours.

See also

- RAID controller

- Disk array

- Vinum volume manager

- Storage area network (SAN)

- Hard disk

- Redundant Array of Inexpensive Nodes

- Redundant Array of Independent Filesystems

- Redundant Array of Inexpensive Servers

- Disk Data Format (DDF)

- Redundant Arrays of Hybrid Disks (RAHD)

References

- [1] U.S. patent 4,092,732

- [2] Patterson, David; Gibson, Garth A.; Katz, Randy (1988). "A Case for Redundant Arrays of Inexpensive Disks (RAID)". SIGMOD Conference. pp. 109–116. Retrieved 2006-12-31.

- [3] Jim Gray and Catharine van Ingen, "Empirical Measurements of Disk Failure Rates and Error Rates", MSTR-2005-166, December 2005

- [4] Bianca Schroeder and Garth A. Gibson, "Disk Failures in the Real World: What Does an MTTF of 1,000,000 Hours Mean to You?"

Further reading

- Charles M. Kozierok (2001-04-17). "Redundant Arrays of Inexpensive Disks". The PC Guide. Pair Networks.


External links

- RAID Disk Space Calculator

- Working RAID illustrations

- RAID Levels — Tutorial and Diagrams

- Slashdot: Which RAID for a Personal Fileserver? — Comments from network specialists and enthusiasts.

- Logical Volume Manager Performance Measurement

- Animations and Descriptions to help Learn about RAID Levels 0, 1, 5, 10, and 50

- Raid Calculator

- Raid Controller (German)

White papers

A significant amount of research has been done into the technical aspects of this storage method. Technical institutions and involved companies have released white papers and technical documentation relevant to RAID arrays and made them available to the public. They are listed below.

- Tutorial on Reed-Solomon Coding for Fault-Tolerance in RAID-like Systems

- Parity Declustering for Continuous Operation in Redundant Disk Arrays

- An Optimal Scheme for Tolerating Double Disk Failures in RAID Architectures

Operating system-specific details

If you would like more information detailing the deployment, maintenance, and repair of RAID arrays on a specific operating system, the external links below, sorted by operating system, could prove useful.

- IBM - seriesI/iSeries/AS400 Running OS400/i5OS

- Novell

- Linux

  - Linux Software-RAID HOWTO

  - RAID-1 QUICK Howto under Linux (Note: Site appears to be down as of 5/21/2007).

  - Experiences w/ Software RAID 5 Under Linux?: "Ask Slashdot" article on RAID 5.

  - Optimal Hardware RAID Configuration for Linux. Make it up to 30% faster by aligning the file system to the RAID stripe structure.

  - Growing a RAID 5 array: blog post which describes using mdadm to grow a RAID 5 array to include more disk space.

  - Linux Software Raid 1 Setup

- Microsoft Windows

  - Basic Storage Versus Dynamic Storage in Windows XP: RAID functionality built into Windows 2000/XP

  - Windows Software RAID Guide