User talk:Black Carrot

Welcome!

WELCOME!! Hello, Black Carrot! I want to personally welcome you on behalf of the Wikipedia community. I hope you like the place and decide to stay. If you haven't already, you can put yourself in the new user log and the list of users so you can be properly introduced to everyone. Don't forget to be bold, and don't be afraid of hungry Wikipedians...there's a rule about not biting newcomers. Some other good links are the tutorial, how to edit a page, or if you're really stuck, see the help pages. Wikipedia is held up by Five Pillars...I recommend reading about them if you haven't already. Finally, it would be really helpful if you would sign your name on talk pages, so people can get back to you quickly. It's easy to do this by clicking the button second from the end on the left of the edit toolbar (next to the one with the "W" crossed out). If that's confusing, or if you have any questions, feel free to drop me a ♪ at my talk page (by clicking the plus sign (+) next to the tab at the top that says "edit this page")...and again, welcome!--ViolinGirl♪ 16:23, 23 December 2005 (UTC)

Neural Net Image Categorization

(Moved from Reference Desk: Science)

Most of the information I can find on neural nets is either very basic and general, or owned by a company and unavailable to outsiders. Where can I find information on the construction of neural nets that leans towards the conceptual (I only know Java, and don't have time to decipher other languages) and towards a large number of inputs, say on the order of millions? Black Carrot 21:46, 6 January 2006 (UTC)

- I hope you realize such a neural net will require massive computing power to run at a reasonable speed. Also, what is the application? Fluid dynamics? StuRat 22:38, 6 January 2006 (UTC)

- I do realize that it will take some work to apply it the way I'm hoping to, but I don't think it's impossible, and I'm certainly willing to try. There are worse ways to waste time. I especially hope to find ways, as you mention, to reduce the number of inputs to a more manageable level, but I don't think I can go below the tens of thousands. I'm trying to find a way to search the web for actual images. Google is great, but it only does keyword searches, and I would like to be able to do more than that. I'd like something that can accurately separate a set of pictures into a Yes pile and a No pile, such as Tree v. Not Tree. Naturally, neural nets leapt to mind. The structures I've found so far, however, don't lend themselves well to this. It's not that they can't be set to sort into the right piles; it's that you need the piles sorted in the first place for backpropagation to work, and then the setup is fairly rigid: it doesn't deal well with cases outside its specific expertise, or with new cases being added. The ideal search, though, would involve progressive narrowing down, and would anticipate related cases. I've come up with some ideas I haven't seen anyone write about that I think would help, but I think things would go a lot faster if I could find out what the people who've been working on this for years have thought of. Any suggestions are appreciated. Black Carrot 01:04, 7 January 2006 (UTC)

- Wow, that's quite an ambitious project. It sounds like the problems you are having are quite similar to those for voice recognition software. Specifically, the software can recognize the difference between "circumvent" and "circumnavigate" if programmed specifically for that task and adjusted for a given voice, but does a poor job of identifying a random word in a random voice. Also, such software needs to "learn" differences between similar words, which requires a great deal of user input to "train" it. I'm not even sure how you would define which picture is a tree and which is not, considering cases like a tree and a person, a tree branch, a tree sprout, a bush, a flower, etc.

- I have thought of a much less ambitious search method, which could search for copies of an exact picture, possibly at a different scale. This could compare the number of pixels of each color and look for a given ratio, as well as looking for colors to be in the same relative position in the pic. Complications such as mirror images, pics trimmed differently, non-uniform scaling, and different color balances would require quite a bit of coding to solve, but it does seem doable. I've often found a pic via a web search which is just what I want as far as subject, but is too small. I would like a way to search for a full-sized copy of the pic, even if the page doesn't contain the keywords I used in the initial picture search. Is that a project which would interest you? StuRat 01:31, 7 January 2006 (UTC)
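A minimal sketch of the color-ratio half of StuRat's idea, under my own assumptions: each pixel's color is bucketed into coarse bins, the counts are divided by the pixel count so the image size cancels out, and two images are compared with histogram intersection. The class name, the bin count (4 levels per channel) and the packed 0xRRGGBB pixel format are illustrative choices. This ignores where the colors are, so the "same relative position" check would need something extra, such as comparing histograms of matching sub-blocks.

```java
/** Hypothetical helper: compares two images by the fraction of pixels in each coarse color bin. */
final class ColorHistogram {
    static final int LEVELS = 4; // 4 levels per channel -> 64 bins (an arbitrary choice)

    /** pixels are packed 0xRRGGBB ints (alpha, if present, is ignored). */
    static double[] normalizedHistogram(int[] pixels) {
        double[] h = new double[LEVELS * LEVELS * LEVELS];
        for (int p : pixels) {
            int r = ((p >> 16) & 0xFF) * LEVELS / 256;
            int g = ((p >> 8) & 0xFF) * LEVELS / 256;
            int b = (p & 0xFF) * LEVELS / 256;
            h[(r * LEVELS + g) * LEVELS + b]++;
        }
        for (int i = 0; i < h.length; i++) h[i] /= pixels.length; // ratios, so image size cancels
        return h;
    }

    /** Histogram intersection: 1.0 for identical color ratios, 0.0 for no shared colors. */
    static double similarity(int[] a, int[] b) {
        double[] ha = normalizedHistogram(a), hb = normalizedHistogram(b);
        double s = 0;
        for (int i = 0; i < ha.length; i++) s += Math.min(ha[i], hb[i]);
        return s;
    }
}
```

A half-red, half-blue image scores 1.0 against a copy with twice as many pixels in the same proportions, which is the scale-invariance StuRat was after.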

- 'Fraid not. I would expect that to be one of the functions of what I'm going for, but it's not by itself what I want. Besides, how many duplicate pictures are there floating around?

- You see the problem pretty clearly. What I think I have to go for is something that, in its structure, mimics the way we see things. Not that it mimics the brain; that would be a waste of time, and I don't have the background anyway. Here's how I see it. The integer inputs (from 0-255 in Java) form a massively multidimensional graph, at each point of which the output (a real number, ideally either 0 or 1) can be represented by a color, either red or blue, with black or white at the boundaries. Makes a nice, manageable picture, except for the countless thousands of unimaginable axes. I think 'phase space' is the technical name for things like this. Now, the most basic form of neural net (sans sigmoid) will draw a beautiful diagonal gradient, which is useless to me. A single-layer network that makes use of the sigmoid function will have a straight line (plane, hyperplane, however many dimensions) between one clear area of blue and one clear area of red, at any slant and position you want. Good start. A two-layer one will draw as many of those as you want (with colors strengthening each other where they overlap), then shove all the outputs above a certain value to one color, and all the ones below it to another, and you have a shape on the graph, most any basic shape you want. Great. Add another layer, and you can have a bunch of shapes scattered across the graph, making it nice and flexible. Now, within those shapes the output will be 0 (No), and beyond them it will be 1 (Yes), or vice versa, and you can clearly see which pictures (which points) will be accepted and which will be rejected. Dandy. Except that that's not what the graph needs to look like to mimic the trends in the positions of actual images. It's hard to say exactly what it would look like, but I can get the concepts down and let the program take care of the rest.
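The first steps of that description can be checked directly in two dimensions. In this sketch the weights are hand-picked for illustration, not trained, and the class name is hypothetical: the linear unit changes smoothly everywhere, one sigmoid unit splits the plane along a line, and a two-layer net combines four such half-planes into a square, with the output unit doing the "shove everything above a certain value to one color" step.

```java
/** Toy nets over two inputs (two axes of the graph); all weights are illustrative. */
final class DecisionRegions {
    static double sigmoid(double z) { return 1.0 / (1.0 + Math.exp(-z)); }

    /** Linear unit without a sigmoid: output varies smoothly everywhere (the diagonal gradient). */
    static double linear(double x, double y) { return 0.5 * x + 0.5 * y; }

    /** One sigmoid unit: a sharp boundary along the line x + y = 1 (a hyperplane in n dimensions). */
    static double oneLayer(double x, double y) { return sigmoid(20 * (x + y - 1)); }

    /** Two layers: four hidden units each cut along one side of a square; the output fires where all agree. */
    static double twoLayer(double x, double y) {
        double k = 20; // steepness: larger k gives crisper edges
        double left = sigmoid(k * (x - 0.25)), right = sigmoid(k * (0.75 - x));
        double bottom = sigmoid(k * (y - 0.25)), top = sigmoid(k * (0.75 - y));
        return sigmoid(k * (left + right + bottom + top - 3.5)); // threshold: "all four sides agree"
    }
}
```

Stacking another layer that ORs several such squares together gives the "bunch of shapes scattered across the graph" case.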
A few characteristics of the goal graph: area around image points, axis-parallel lines out from points, perpendicular rotation of shape about origin, image point shadows, threads between points, and quite a few others I haven't nailed down. I've solved the first one. The idea there is that, for any clear picture, there is some amount of error or static you can add to each pixel and still keep the picture essentially and recognizably what it is. On the graph, this means that there is a certain distance out you can go in any direction (direction = one color of one pixel in the image, or movement parallel to an axis) or combination of directions from the point that represents the clear image, and still be in essentially the same place. So, if that point is one color, the area around it must be as well, but not the area beyond that. It's possible to draw a nice simple square/box/hypercube around that area with a two-level bit of neural net, but with the number of sides needed (two per axis, >80000 axes), that box is prohibitively expensive in terms of how many nodes the net contains. I eventually worked out that if, instead of multiplying each input by a weight, then summing them and running them through the sigmoid, you add a bias to each input, square each result, then do all the rest, it draws a nice little resizable egg of whichever color around any point you want, with one layer, startlingly few nodes, and the added benefit that the list of the biases, laid out in a rectangle, is the clear picture you started with. So, that's where I'm coming from. Any suggestions? --Black Carrot 03:59, 7 January 2006 (UTC)
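A sketch of the egg node as described: each input gets a bias, is squared, weighted and summed, and the sum goes through the sigmoid. Setting each bias to minus the clear picture's value and giving every input the same negative weight makes the output about 1 inside a sphere of the chosen radius around that picture and about 0 outside; unequal weights stretch it into an egg. Units shaped like this are close relatives of what the literature calls radial basis function (RBF) networks, which may be a useful search term. The class name, radius and steepness values are illustrative assumptions.

```java
/** Hypothetical "egg" node: per-input bias, square, weight, sum, sigmoid. */
final class EggNode {
    final double[] bias;   // b_i = -(centre value), so the biases hold the clear picture
    final double[] weight; // negative: output falls as you move away from the centre
    final double offset;   // sets the size of the egg

    EggNode(double[] centre, double radius, double steepness) {
        bias = new double[centre.length];
        weight = new double[centre.length];
        for (int i = 0; i < centre.length; i++) {
            bias[i] = -centre[i];
            weight[i] = -steepness; // equal weights give a sphere; unequal ones an egg
        }
        offset = steepness * radius * radius; // output is exactly 0.5 at distance `radius`
    }

    double output(double[] input) {
        double sum = offset;
        for (int i = 0; i < input.length; i++) {
            double d = input[i] + bias[i];
            sum += weight[i] * d * d;
        }
        return 1.0 / (1.0 + Math.exp(-sum));
    }

    /** The clear picture can be read back out of the biases. */
    double[] centre() {
        double[] c = new double[bias.length];
        for (int i = 0; i < c.length; i++) c[i] = -bias[i];
        return c;
    }
}
```

The node needs one bias and one weight per input, so it grows linearly with the number of pixels. The hypercube version needs two sigmoid units per axis plus an output unit.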

Wow, you've really given this a lot of thought, I'm impressed. I still think the number of calculations necessary to search all of Google for all pictures of trees would take way too long to be practical for a search at present, but perhaps it would be good to have the technology ready and waiting for when such computing capacity comes along. Of course, just like voice recognition, I doubt that, once you have the program optimized to find trees, it will be any good at finding, say, birds until you alter the program significantly, and the same goes for every other object it needs to recognize.

I think some of the steps necessary for this to work might be valuable in and of themselves, however. I listed one above; another that interests me is "reverse pixelization". That is, I would like to be able to take a bitmap of a line and a circle, say, and create a vector representation of the geometric elements. One application would be to take a low-res picture and generate a higher-res pic of the same thing. Edge detection is one aspect of any such program; it is probably covered under machine vision.
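Edge detection is usually the first step toward that kind of vectorizing. Here is a minimal sketch of one standard edge filter, the Sobel operator, on a greyscale image stored as img[row][col] with values 0-255. The class name is hypothetical, border pixels are simply left at 0, and turning the edges into lines and circles (for example with a Hough transform) is a further step not shown.

```java
/** Sobel gradient magnitude of a greyscale image stored as img[row][col]. */
final class Sobel {
    static double[][] gradientMagnitude(int[][] img) {
        int h = img.length, w = img[0].length;
        double[][] out = new double[h][w]; // border pixels stay 0
        for (int y = 1; y < h - 1; y++) {
            for (int x = 1; x < w - 1; x++) {
                // horizontal change: right column minus left column, centre row weighted 2
                int gx = -img[y - 1][x - 1] + img[y - 1][x + 1]
                        - 2 * img[y][x - 1] + 2 * img[y][x + 1]
                        - img[y + 1][x - 1] + img[y + 1][x + 1];
                // vertical change: bottom row minus top row, centre column weighted 2
                int gy = -img[y - 1][x - 1] - 2 * img[y - 1][x] - img[y - 1][x + 1]
                        + img[y + 1][x - 1] + 2 * img[y + 1][x] + img[y + 1][x + 1];
                out[y][x] = Math.sqrt((double) gx * gx + (double) gy * gy);
            }
        }
        return out;
    }
}
```

On an image that is black on the left and white on the right, the output is large only in the columns touching the boundary and 0 in flat areas.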

Well, as I say, I'm quite skeptical that you will get the full program to work anytime soon, but I still think it is valuable for its side benefits. And, if you can write and sell such a program, I'm sure it would be worth millions! StuRat 06:14, 7 January 2006 (UTC)

- I have done this before. The approach outlined is feasible, but I think you need to make your project a bit more well defined before you will be able to achieve much. It seems that you know what you need to look for, but to really get your project up and running, I would suggest that you simplify the problem first before proceeding. Try this: take an 8 by 8 grid, and see if you can create a neural network which can distinguish the characters A, O and E written on the grid in a pixelated form. Also, for your tree recognition scheme, you may want to consider alternative measures of classification which do not rely on the network of sigmoidal functions. There are plenty of quality papers on complexity analysis and image processing which will be handy. You may also want to search for imaging journals which deal specifically with diagnosis and such - many image processing/recognition algorithms are well established and used in the medical field. --HappyCamper 06:23, 7 January 2006 (UTC)

- I definitely agree with starting with small, simple tasks and working your way up. Then again, this approach is recommended for any complex problem. StuRat 07:29, 7 January 2006 (UTC)

- You might wanna look into Military Image Recognition Systems. --Jvh, 7 January 2006

Neural nets are (amongst other, equivalent descriptions) statistical objects. It might be worth pursuing them from that angle, particularly if you're looking for rigorous descriptions. Also, for the resolution upscaling, there's a lot of work on statistics applied to images that would be useful background reading. Syntax 22:42, 7 January 2006 (UTC)
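To make the statistical angle concrete: a single sigmoid unit is the same model as logistic regression. If you use cross-entropy as the error measure, the usual weight update for that unit is gradient ascent on the log-likelihood. Here is a short derivation, with y_n the 0/1 label and ŷ_n the unit's output on training example n:

```latex
\hat y_n = \sigma(\mathbf{w}^\top \mathbf{x}_n + b), \qquad
\sigma(z) = \frac{1}{1 + e^{-z}}, \qquad
\sigma'(z) = \sigma(z)\,\bigl(1 - \sigma(z)\bigr)

\ell(\mathbf{w}, b) = \sum_n \Bigl[\, y_n \log \hat y_n + (1 - y_n) \log (1 - \hat y_n) \Bigr]

\frac{\partial \ell}{\partial \mathbf{w}} = \sum_n (y_n - \hat y_n)\, \mathbf{x}_n, \qquad
\frac{\partial \ell}{\partial b} = \sum_n (y_n - \hat y_n)
```

Deeper nets add hidden layers on top of the same idea, which is why statistics texts on logistic regression and maximum likelihood are useful background.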

- StuRat- I don't see why a well-designed system would take any more processing than what they already do, which is substantial in itself. Actually running something once through a net doesn't take much, and training it isn't that different from running something through it a few thousand times, so although I wouldn't be able to set it up on my laptop, I think the technology to do so is already sitting in Google's basement. Also, what I was talking about above is a system for describing the entire theoretical graph of all images in existence. Once I've got some idea of how that would work (like the example above), and an idea of what mathematical and computational structure it would take to efficiently separate all recognizable images from the near infinity of static surrounding them (and it is near infinity; try calculating the area of the graph), I'm pretty sure any net I base on it will work for all search terms. If you meant that once I'd done a search on trees it would be hard to move it to something else, that's no problem. I expect to start with a blank net each time I run a search anyway. I believe software is available that turns pictures into collections of vectors, if you're looking for it. My brother is into art, and his pad does that. As to the selling- I hope so, but I kind of like the idea of providing it for free. Also, according to the Google searches I've been doing for hints on how to build nets, there are companies that are already marketing things a lot like this, and I'm not interested in competing for business.
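Here is a rough count of how big that graph is. It assumes 150x150-pixel images (the size mentioned later in this thread) with three 8-bit color channels, so each axis runs over 256 values:

```latex
N_{\text{axes}} = 150 \times 150 \times 3 = 67\,500, \qquad
N_{\text{points}} = 256^{67\,500} = 2^{540\,000} \approx 10^{162\,556}
```

Any set of recognizable pictures is a vanishingly small fraction of those points.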

- HappyCamper- What do you mean by 'done this before'? Which bit? Professionally, or as a hobby? And what about the outline is feasible? What's undefined about it? Is it important to use A, O and E specifically, or any set of letters? Or all letters? Using specific drawings of them, or a range of styles? What papers or how-tos, specifically, would you recommend, and how do I get to them?

- Jvh- I wouldn't think they'd be sharing any of that information, but if you know how I can get it, I'd love to learn it.

- Syntax- ???

- Also, six clarifying statements, which I think should be more common in long discussions: I would prefer to use just neural nets; I don't care about the complexities of optimization until I have something up and running; I (as a high schooler) don't have access to anything and don't have experience finding it; I'm pretty sure going below 150x150 pixels would radically change the structure of what I'm doing (how we recognize things begins to change at that level), which means anything that works at 8x8 has limited application except as general practice; I support general practice as a way of getting an intuitive grasp of a system, and have been doing it for quite a while already; and I don't care about most of the things the papers I can get to are about, like facial recognition and 3D recognition, just about making a reliable searching tool.

- One further question: does anyone know how to take a y=1/x graph and get a higher-dimensional version of it? I've decided I can combine two functions (area around image points, axis-parallel lines out from points) by taking each point that represents a clear image and drawing curves asymptotically out in all axial directions. With three inputs (axes, dimensions), this would resemble six cones attached to the corners of an octahedron. With two inputs, this would resemble the graphs y=1/x and y=-1/x combined. That means that if I made a two-input, two-level, three-node net out of the formulae O1 = I1*I2, O2 = -I1*I2, and O3 = O1+O2, or more accurately, O1 = sig(B1 + W1*((I1-B2)*(I2-B3))), O2 = sig(B1 - W1*((I1-B2)*(I2-B3))), and O3 = sig(-W3*(O1+O2-0.5)), where W means weight and B means bias, I would have what I wanted. This has proved difficult to extend to three inputs and beyond. Besides the one above, I need another way of taking 1/x to higher dimensions: three planes perpendicular to each other with curves asymptotically approaching them, which would resemble a cube with all eight corners carved out.
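Here is a sketch of the question in Java. It rests on my own assumptions and is not taken from a reference. `twoInput` is the three-node net from the post: with B1 negative, its output is roughly 1 where |(I1-B2)(I2-B3)| < -B1/W1, which is the y = ±1/x region around the point. After that come two candidate n-dimensional generalizations, both with hypothetical names.

- `nearPlanes` uses the product of all the differences. In 3D this gives the region hugging three perpendicular planes.
- `nearAxes` uses the sum of pairwise products. That sum stays small only when at most one coordinate is far from the point, so it gives tendrils along the axis-parallel lines that narrow roughly like 1/distance.

Both reduce to |xy| in two dimensions. The constants k and steepness are arbitrary.

```java
/** Hypothetical sketches of hyperbola-shaped regions around a point c; constants are illustrative. */
final class HyperbolaNets {
    static double sig(double z) { return 1.0 / (1.0 + Math.exp(-z)); }

    /** The post's two-input, three-node net; with b1 < 0 it is ~1 where |p| < -b1/w1. */
    static double twoInput(double i1, double i2, double b1, double w1, double b2, double b3, double w3) {
        double p = (i1 - b2) * (i2 - b3);
        double o1 = sig(b1 + w1 * p);
        double o2 = sig(b1 - w1 * p);
        return sig(-w3 * (o1 + o2 - 0.5));
    }

    /** "Planes" version: |prod (x_i - c_i)| < k, summed in logs so thousands of inputs don't overflow. */
    static double nearPlanes(double[] x, double[] c, double k, double steepness) {
        double logAbsProduct = 0; // Math.log(0) is -Infinity, which correctly means "on a plane"
        for (int i = 0; i < x.length; i++) logAbsProduct += Math.log(Math.abs(x[i] - c[i]));
        return sig(steepness * (Math.log(k) - logAbsProduct));
    }

    /** "Axes" version: sum over pairs |d_i||d_j| < k, which is small only near an axis-parallel line. */
    static double nearAxes(double[] x, double[] c, double k, double steepness) {
        double s = 0, sq = 0;
        for (int i = 0; i < x.length; i++) {
            double d = Math.abs(x[i] - c[i]);
            s += d;
            sq += d * d;
        }
        double pairSum = (s * s - sq) / 2; // identity: sum_{i<j} d_i d_j = ((sum d)^2 - sum d^2) / 2
        return sig(steepness * (k - pairSum));
    }
}
```

The pairwise-sum identity keeps `nearAxes` linear in the number of inputs, which matters at tens of thousands of pixels. The point (50, 50, 0) shows the difference between the two shapes: it lies on a plane through c but far from every axis line, so `nearPlanes` accepts it and `nearAxes` rejects it.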

- I appreciate all the help. --Black Carrot 23:06, 7 January 2006 (UTC)

- BTW, since this is getting pretty long, should we move it to my talk page? --Black Carrot 23:13, 7 January 2006 (UTC)

More responses

I was wondering where this post ended up...it sounds like you could use a pretty well grounded textbook in artificial intelligence...here, try this one: [1] :-) . The letters A, O and E do not matter; I just picked them out of thin air. Your neural network should be good enough that it can detect a range of styles for these letters. You'll need at least one layer of hidden nodes to do this. --HappyCamper 05:55, 10 January 2006 (UTC)
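Here is a minimal sketch of the exercise with one hidden layer. The assumptions are mine: the 8x8 drawings below, a 64-16-3 layout, squared-error backpropagation, learning rate 0.5 and a fixed random seed. The class and method names are hypothetical. It trains on only one drawing per letter, so it shows the mechanics rather than the "range of styles" goal; for that you would add several hand-drawn variants of each letter to the training loop.

```java
import java.util.Random;

/** Hypothetical 64-16-3 sigmoid net for the A/O/E exercise, trained with plain backpropagation. */
final class LetterNet {
    static final int IN = 64, HID = 16, OUT = 3;
    static final String[] A = {"...##...", "..#..#..", ".#....#.", ".#....#.",
                               ".######.", ".#....#.", ".#....#.", "........"};
    static final String[] O = {"..####..", ".#....#.", ".#....#.", ".#....#.",
                               ".#....#.", ".#....#.", "..####..", "........"};
    static final String[] E = {".######.", ".#......", ".#......", ".#####..",
                               ".#......", ".#......", ".######.", "........"};

    final double[][] w1 = new double[HID][IN + 1];  // last entry of each row is the bias
    final double[][] w2 = new double[OUT][HID + 1];

    LetterNet(long seed) {
        Random r = new Random(seed);
        for (double[] row : w1) for (int i = 0; i < row.length; i++) row[i] = r.nextGaussian() * 0.5;
        for (double[] row : w2) for (int i = 0; i < row.length; i++) row[i] = r.nextGaussian() * 0.5;
    }

    static double sig(double z) { return 1 / (1 + Math.exp(-z)); }

    /** '#' = ink (1), anything else = background (0). */
    static double[] encode(String[] rows) {
        double[] x = new double[IN];
        for (int r = 0; r < 8; r++)
            for (int c = 0; c < 8; c++) x[r * 8 + c] = rows[r].charAt(c) == '#' ? 1 : 0;
        return x;
    }

    /** One fully connected sigmoid layer whose weight rows end in a bias. */
    static double[] layer(double[][] w, double[] x) {
        double[] y = new double[w.length];
        for (int j = 0; j < w.length; j++) {
            double z = w[j][x.length];
            for (int i = 0; i < x.length; i++) z += w[j][i] * x[i];
            y[j] = sig(z);
        }
        return y;
    }

    int classify(double[] x) {
        double[] o = layer(w2, layer(w1, x));
        int best = 0;
        for (int k = 1; k < OUT; k++) if (o[k] > o[best]) best = k;
        return best;
    }

    /** One backpropagation step on squared error against a one-hot target for `label`. */
    void train(double[] x, int label, double rate) {
        double[] h = layer(w1, x), o = layer(w2, h);
        double[] dOut = new double[OUT];
        for (int k = 0; k < OUT; k++) dOut[k] = ((k == label ? 1 : 0) - o[k]) * o[k] * (1 - o[k]);
        for (int j = 0; j < HID; j++) { // hidden deltas use w2 before it is updated
            double back = 0;
            for (int k = 0; k < OUT; k++) back += dOut[k] * w2[k][j];
            double dHid = back * h[j] * (1 - h[j]);
            for (int i = 0; i < IN; i++) w1[j][i] += rate * dHid * x[i];
            w1[j][IN] += rate * dHid;
        }
        for (int k = 0; k < OUT; k++) {
            for (int j = 0; j < HID; j++) w2[k][j] += rate * dOut[k] * h[j];
            w2[k][HID] += rate * dOut[k];
        }
    }

    static LetterNet trained(long seed, int epochs) {
        LetterNet net = new LetterNet(seed);
        double[][] xs = {encode(A), encode(O), encode(E)};
        for (int e = 0; e < epochs; e++)
            for (int k = 0; k < xs.length; k++) net.train(xs[k], k, 0.5);
        return net;
    }
}
```

Once it learns the three clean drawings, a good next check is to flip a pixel or two, or shift a letter one column, and see whether the answer survives.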

Computer Picture Recognition

Continuing discussion here per your request....

One point I made earlier bears repeating and elaboration. Unlike voice recognition, where there would be considerable agreement from listeners on when a word is "tree" and when it is not, I don't believe there will be much agreement on when a random picture from Google is of a tree or not. Keep in mind that many pictures found via Google will be of part of a tree, many trees, a tree and something else, something that looks like a tree (like a frost pattern or a tree diagram), etc. Also, trees take many forms, some with lots of branches and some with no apparent branches, like a palm tree. Those with branches may also have those branches totally obscured by leaves or, in the case of fir trees, needles and possibly snow. So, developing a single method for identifying all trees seems impossible. You would instead need a collection of many recognition methods: for example, one for fir trees, one for deciduous trees with leaves, one for deciduous trees without leaves, and one for palm trees. And how would you classify a saguaro cactus? Is that a tree? I really think this problem is far more complex than you realize. However, I'm impressed with your knowledge of the subject, especially for a high school student. I just don't want you to get frustrated by taking on an impossible task, hence my advice (and somebody else's) that you start on some manageable task, then slowly add to it. StuRat 09:51, 9 January 2006 (UTC)

CNTD

I appreciate your concern, but I don't think there is any way to start small on this. It's an all-or-nothing proposition.

I think the problem isn't quite in the form you think it is. It's not a matter of building up a library of terms, or of translating a word into picture form. I just used Tree v Not Tree because it seemed clear. It's a matter of getting what's in a person's head out of the mass of pictures available. This would most likely involve them going through screens of google-like grids of pictures, clicking Yes, No and Ignore for each one. The net would then, as is their talent, figure out for itself what the user sees in them. This method is, of course, open to improvement.
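One way to sketch the Yes/No/Ignore loop assumes each picture has already been reduced to a hypothetical feature vector; StuRat's color-ratio idea above would do. A single sigmoid unit (that is, logistic regression) takes one gradient step per click, skips Ignore clicks entirely, and re-ranks the remaining pictures by score after each screen. The class, enum and learning rate are illustrative assumptions, not an established design.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

/** Hypothetical click types from the results screen. */
enum Click { YES, NO, IGNORE }

/** Hypothetical relevance-feedback ranker: one sigmoid unit updated online from clicks. */
final class FeedbackRanker {
    private final double[] w;
    private double bias = 0;
    private final double rate;

    FeedbackRanker(int features, double rate) {
        this.w = new double[features];
        this.rate = rate;
    }

    /** Estimated probability that the user would click Yes on a picture with these features. */
    double score(double[] f) {
        double z = bias;
        for (int i = 0; i < w.length; i++) z += w[i] * f[i];
        return 1 / (1 + Math.exp(-z));
    }

    /** One logistic-regression gradient step per Yes/No click; Ignore leaves the model unchanged. */
    void feedback(double[] f, Click click) {
        if (click == Click.IGNORE) return;
        double target = click == Click.YES ? 1 : 0;
        double err = target - score(f);
        for (int i = 0; i < w.length; i++) w[i] += rate * err * f[i];
        bias += rate * err;
    }

    /** Candidates sorted best-first, ready for the next screen of pictures. */
    List<double[]> rank(List<double[]> candidates) {
        List<double[]> sorted = new ArrayList<>(candidates);
        sorted.sort(Comparator.comparingDouble((double[] f) -> score(f)).reversed());
        return sorted;
    }
}
```

Starting with all-zero weights matches "a blank net each time I run a search". Every picture scores 0.5 until the first clicks come in.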

Something that seems to have gotten lost: based on what I'm doing, can you recommend any material I can study for ideas? Black Carrot 13:06, 9 January 2006 (UTC)

- My point is still that, if it isn't clear to a person what constitutes a picture of a tree, then it will be near impossible for a computer to make such a decision. As for materials, this falls under the general category of machine vision, as was mentioned before, so I would start there.

- Another possible application would be a pornography filter that would mark pics with lots of "skin color" pixels as potential porn. I doubt if this type of filter would be very accurate, but perhaps it might be better than nothing. StuRat 03:41, 10 January 2006 (UTC)
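Here is a sketch of that crude filter. It uses one widely quoted fixed-threshold RGB rule for skin pixels in daylight: R > 95, G > 40, B > 20, a channel spread above 15, |R - G| > 15, and red the largest channel. Treat those thresholds and the flagging fraction as assumptions to tune; the class and method names are hypothetical.

```java
/** Hypothetical skin-pixel heuristic; thresholds follow one commonly cited daylight RGB rule. */
final class SkinFilter {
    static boolean looksLikeSkin(int rgb) {
        int r = (rgb >> 16) & 0xFF, g = (rgb >> 8) & 0xFF, b = rgb & 0xFF;
        int max = Math.max(r, Math.max(g, b)), min = Math.min(r, Math.min(g, b));
        return r > 95 && g > 40 && b > 20 && max - min > 15
                && Math.abs(r - g) > 15 && r > g && r > b;
    }

    /** Fraction of pixels (packed 0xRRGGBB) that pass the skin test. */
    static double skinFraction(int[] pixels) {
        int count = 0;
        for (int p : pixels) if (looksLikeSkin(p)) count++;
        return (double) count / pixels.length;
    }

    /** Flag the picture when the skin fraction exceeds a caller-chosen threshold. */
    static boolean flag(int[] pixels, double threshold) {
        return skinFraction(pixels) > threshold;
    }
}
```

A fixed rule like this reacts to color alone, so any picture dominated by skin tones (a face close-up, a medical photo) gets flagged the same way.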

- The porn filter application already exists (fascinating discussion, by the way!). The institution I work in has this software running in the background of its web-based email system. I frequently use medical images (intraoperative photos, etc.) in presentations and lectures I give. I usually email them to myself to run them from lecture room computers, and I always get messages from the postmaster saying my documents have been flagged as possibly containing porn because of the skin tones in the images. I don't think creating a porn filter would do justice to such an ambitious project. Mattopaedia 13:32, 19 January 2006 (UTC)

- That's small potatoes. If I wanted to make a porn filter like that, it had damn well better give your boss a detailed report of what you were looking at, complete with links to similar things, and send your wife a letter describing your infidelity, and arrest or execute you on the spot if there are any children in the picture. Of course, I'm against any such thing on principle, so I'm not even going to bother starting it. I might go the other way, though. One question: how do they deal with black people? Black skin is very similar to a lot of non-skin colors, so it seems like it'd be harder to spot consistently than white or light brown skin. Black Carrot 03:27, 21 January 2006 (UTC)

AOE

HappyCamper- Thanks for suggesting that AOE thing. It's helped a lot. Here's my thinking on it so far. There are a few basic things the net would have to be able to do. First, it would have to recognize a shape in black on white. Second, it would have to recognize that shape moved around on the board, possibly even rotated. Third, it would have to recognize shapes similar to that shape as being the same. Fourth, it would have to (possibly have to, but I'll get to that) differentiate between different shapes. Fifth, if I want this to bear any resemblance whatsoever to the final product, it would have to at least be able to deal with a greyscale. The last on this list is the first thing I decided to work on.

(Greyscale here runs from 0 = dark to 1 = light.) So, how would this work, having a greyscale? The shape pixels would still be separate from the non-shape pixels. The shape pixels would still be dark, and the non-shape pixels would still be light. But if the shape pixels are too similar to the non-shape pixels, there are problems, and if any of the shape pixels is lighter than any of the non-shape pixels, it ceases to be part of the shape. So, I'm thinking it would make sense for the net to accept anything where the pixels that are supposed to be in the letter are at least, say, 0.5 darker than the pixels that aren't. That is to say, the lightest of the pixels in the letter is at least 0.5 darker than the darkest of the pixels that aren't in it. The simplest way to do this (which took me a surprising amount of work to realize) would be to find the max() of the letter pixels and the min() of all the rest, subtract the first from the second, subtract 0.5, multiply by a huge number and run the result through the sigmoid. A slightly better way would be to take a single min() and a single max() over all the pixels. Before the min, add 2 to each letter pixel (0 to the rest), and before the max, add -2 to each non-letter pixel (0 to the rest). That way the min can only come from the background and the max only from the letter. What took me a long time to figure out, before I stumbled on the max() thing, was how to do it with more ordinary functions. I did find a good way, involving absolute value, but I think the max() thing is better for now. --Black Carrot 01:08, 20 January 2006 (UTC)
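Here are both versions of that min()/max() test written out as described. Pixel values run from 0 = dark to 1 = light, `gap` plays the role of the 0.5, and a large `sharpness` stands in for "a huge number". The class and parameter names are hypothetical. The second version assumes there is at least one letter pixel and one background pixel; the +2/-2 offsets work because every value lies in [0, 1].

```java
/** Hypothetical greyscale letter acceptor using a min/max contrast margin. */
final class GreyLetterTest {
    static double sig(double z) { return 1 / (1 + Math.exp(-z)); }

    /** Version 1: the lightest letter pixel must be at least `gap` darker than the darkest background pixel. */
    static double accept(double[] pixels, boolean[] inLetter, double gap, double sharpness) {
        double maxLetter = Double.NEGATIVE_INFINITY, minBackground = Double.POSITIVE_INFINITY;
        for (int i = 0; i < pixels.length; i++) {
            if (inLetter[i]) maxLetter = Math.max(maxLetter, pixels[i]);
            else minBackground = Math.min(minBackground, pixels[i]);
        }
        return sig(sharpness * (minBackground - maxLetter - gap));
    }

    /** Version 2: one min and one max over all pixels, with offsets that knock the wrong group out. */
    static double acceptOffsets(double[] pixels, boolean[] inLetter, double gap, double sharpness) {
        double min = Double.POSITIVE_INFINITY, max = Double.NEGATIVE_INFINITY;
        for (int i = 0; i < pixels.length; i++) {
            min = Math.min(min, pixels[i] + (inLetter[i] ? 2 : 0));  // letter pixels can't win the min
            max = Math.max(max, pixels[i] + (inLetter[i] ? 0 : -2)); // background can't win the max
        }
        return sig(sharpness * (min - max - gap));
    }
}
```

Both functions return the same value. The second is closer to a fixed net layout, because the letter mask only changes constant offsets instead of deciding which inputs feed which node.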

- It sounds to me like you are trying to combine two approaches into a single system: a neural network based on fuzzy logic. Personally, I've never tried combining the two, so I don't know how feasible the approach is in practice. It's possible in principle, but it might be a bit complicated. I think what you want to do is forget about the greyscale completely and focus on black and white pixels first. Forget about the orientation of the letters too, and assume from the outset that they are always oriented correctly. In real text processing systems, this is a reasonable assumption: text recognition scanners assume that the printed page contains letters which are already oriented correctly. Internally, a lot of systems encode the pixels and characters as ones and zeros, without greyscale. You can get rid of a greyscale with a single threshold node. Some systems then try to recognize lines of words, then the individual words, and then the characters that make up the words. This is based on comparing a database of characters (typically encoded in a matrix) to the digitization of the image using some sort of autocorrelation function. What you can do is replace this autocorrelation function with your neural network so that the speed is improved. If you are using neural networks, max() and min() are standard functions, so I'd definitely stick with those. It sounds like you're learning a lot of stuff and having fun with your project! I hope this post helps you further. Glad the AOE thing was useful! :-) --HappyCamper 03:41, 21 January 2006 (UTC)
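Here is a sketch of the pipeline described above, under simplifying assumptions. One threshold node turns greyscale into ink/background bits. Each stored character template is then scored against the bits with a normalized correlation, a common template-matching measure, and the best score wins. The class name, threshold and templates are hypothetical and supplied by the caller; real OCR systems first segment the page into lines, words and characters.

```java
/** Hypothetical threshold-then-correlate character matcher. */
final class TemplateMatcher {
    /** A single threshold "node": 1 for dark (ink), 0 for light. */
    static int[] binarize(double[] grey, double threshold) {
        int[] b = new int[grey.length];
        for (int i = 0; i < grey.length; i++) b[i] = grey[i] < threshold ? 1 : 0;
        return b;
    }

    /** Normalized correlation of two equal-length 0/1 vectors: 1 = same pattern, -1 = inverted. */
    static double correlation(int[] a, int[] b) {
        double ma = 0, mb = 0;
        for (int i = 0; i < a.length; i++) { ma += a[i]; mb += b[i]; }
        ma /= a.length;
        mb /= b.length;
        double num = 0, da = 0, db = 0;
        for (int i = 0; i < a.length; i++) {
            double x = a[i] - ma, y = b[i] - mb;
            num += x * y;
            da += x * x;
            db += y * y;
        }
        return (da == 0 || db == 0) ? 0 : num / Math.sqrt(da * db); // blank input/template: no match
    }

    /** Index of the stored template that correlates best with the binarized input. */
    static int bestMatch(double[] grey, double threshold, int[][] templates) {
        int[] bits = binarize(grey, threshold);
        int best = 0;
        double bestScore = Double.NEGATIVE_INFINITY;
        for (int t = 0; t < templates.length; t++) {
            double s = correlation(bits, templates[t]);
            if (s > bestScore) { bestScore = s; best = t; }
        }
        return best;
    }
}
```

Replacing `correlation` with a trained net is the substitution HappyCamper suggests.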

- That wouldn't work, though, because I'm not dealing with binary information; I'm not actually even interested in a greyscale. I'm interested in color pictures. On the graph, binary information is confined to the corners (0-0, 0-1-1, 0-1-0-1-0-0), which of course aren't hard to separate from each other. That is totally different from something where the points are scattered throughout the graph, and I don't see any way to transition from one to the other. Also, it is vitally necessary that my net be capable of seeing something (say, an eye) anywhere in the picture, from any orientation, as the same thing. Taking something like that on a case-by-case basis (if here, eye; if here, eye; if here, eye...) would be insanely inefficient, and this letter thing seems like a good stepping stone. Incidentally, you never answered the questions from my last post on Neural Net Image Categorization. I have one to add. When most people build neural nets for a purpose, what aspect is being designed? New nodes? Arrangements of nodes? Different training methods? It seems odd to be 'arranging' nodes that are all identical. Black Carrot 17:40, 21 January 2006 (UTC)

- I just read up about fuzzy logic, and I don't see the similarity. What am I missing? Black Carrot 18:45, 21 January 2006 (UTC)

- Um...these posts have been so long that I've developed a sort of "tunnel vision" and forgot what your real question was. I can tell you that I have not encountered any sort of neural network with on the order of a million input nodes being used in a successful application - too many nodes is not a very good thing when it comes to designing neural networks - it means that you have not simplified the problem sufficiently so that the net is only processing data that is "essential" to the problem. When people design neural networks, it is more of an art than a science - all the things you listed above are fiddled with...the training function, the interconnections, the step size of the changes...etc...etc...

- I only brought up fuzzy logic, because you mentioned the max{} and min{} functions, and these are very reminiscent of the methods which are used in fuzzy logic to compute membership functions and such. A neural network can have nodes which output values based on fuzzy logic - that was the connection I was trying to get at. What exactly do you want to do with your neural net? To identify eyes in a photograph? You must have some idea of the sorts of images you are processing. --HappyCamper 06:08, 22 January 2006 (UTC)
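For reference, here is the fuzzy-logic connection in code. In the standard (Zadeh) operators, fuzzy AND is min and fuzzy OR is max over membership values in [0, 1]. This tiny sketch adds a triangular membership function; the class name, shapes and numbers are illustrative.

```java
/** Hypothetical fuzzy-logic helpers using the standard min/max operators. */
final class Fuzzy {
    /** Triangular membership: 0 outside (a, c), rising linearly to 1 at b. */
    static double triangle(double x, double a, double b, double c) {
        if (x <= a || x >= c) return 0;
        return x < b ? (x - a) / (b - a) : (c - x) / (c - b);
    }

    static double and(double p, double q) { return Math.min(p, q); } // fuzzy AND
    static double or(double p, double q) { return Math.max(p, q); }  // fuzzy OR
    static double not(double p) { return 1 - p; }                    // fuzzy NOT
}
```

A statement like "this pixel is dark AND inside the letter" then gets the smaller of the two membership values, much like the min() trick in the greyscale post.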

I feel your pain. I'm usually constantly reading back over conversations to make sure I didn't miss anything, and I usually have. What neural nets have you encountered in a successful application? What do you do that brings you into contact with them at all?

I do indeed. I know exactly what I want to do with it now, and I know what I wanted to do with it when I started, which are not quite the same. Because this idea was around for a fair few months before I decided to actually do it, and has been going for a long time since then, and because there are a lot of good reasons to do it, it's hard to pinpoint what started it. I'd say it was when I discovered (much to my disappointment) that Google is entirely incapable of searching the web successfully for pictures of girls in pajama bottoms. I've found a total of three such pictures when not looking for them (with totally different search terms), but never on purpose. This highlights a flaw in Google that I came up against again when trying to do a major English project. We were supposed to find a painting of a person (say, Girl with a Pearl Earring), dress up like that person, take a picture as close to identical to that painting as possible, and turn them in with a report. After hours of painful searching on Google, the closest I came to something usable was the Mona Lisa, which I wound up imitating quite passably. What I want to do is find you the pictures you would choose if you had time to go through every picture on the web yourself, and I want to do it in minutes. What I want to do by the end of this school year is get something close enough to that that I can ace my independent study project. Black Carrot 22:04, 22 January 2006 (UTC)

- Independent studies project? Sounds very neat. Neural nets are everywhere, even in the most unexpected places: dishwashers, car engines, medical image processing, security systems...the list goes on and on. I've even seen a neural network implemented inside a model car so that it can parallel park by itself. I think it's wonderful that you like this stuff so much. Let me suggest something else, and perhaps this might get your imagination going a bit more. You have a digital camera, right? Try this: design an algorithm that can recognize the colour of traffic lights at an intersection, reliable enough that if a blind person were to, say, take a video at an intersection, they would know whether it is a red light, a green light, or a yellow light. Make it so that it works even if it's dark, if it's dusk, if there is a stop sign nearby, if someone is wearing a green shirt. Make it robust enough that it can recognize the traffic lights regardless of how far from the intersection the picture was taken. --HappyCamper 20:02, 23 January 2006 (UTC)
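Here is a sketch of only the color-naming step of that exercise. It assumes the region around the lamp has already been cropped, and it uses the JDK's `java.awt.Color.RGBtoHSB` to get hue, saturation and brightness. The hue bands and the brightness/saturation cutoffs are my own guesses. The class name is hypothetical, and it does nothing about the green-shirt, stop-sign or distance cases.

```java
import java.awt.Color;

/** Hypothetical lamp-color classifier for an already-cropped region. */
final class TrafficLight {
    enum Light { RED, YELLOW, GREEN, UNKNOWN }

    /** pixels are packed 0xRRGGBB; only bright, saturated pixels (lit lamps) are counted. */
    static Light classify(int[] pixels) {
        int red = 0, yellow = 0, green = 0;
        float[] hsb = new float[3];
        for (int p : pixels) {
            Color.RGBtoHSB((p >> 16) & 0xFF, (p >> 8) & 0xFF, p & 0xFF, hsb);
            if (hsb[1] < 0.5f || hsb[2] < 0.7f) continue; // skip dull or dark pixels
            float h = hsb[0];                              // hue in [0, 1)
            if (h < 0.05f || h > 0.95f) red++;
            else if (h < 0.2f) yellow++;
            else if (h > 0.25f && h < 0.55f) green++;
        }
        int best = Math.max(red, Math.max(yellow, green));
        if (best == 0) return Light.UNKNOWN;
        return best == red ? Light.RED : best == yellow ? Light.YELLOW : Light.GREEN;
    }
}
```

Working in hue rather than raw RGB is meant to make the answer depend less on overall brightness, which is one (partial) handle on the dusk and darkness cases.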

- That's a good project to test myself on, but it's not in essence different from the letters, and I need to solve the same problems to do it. The problem I'm working on now (problem 2 above) is moving something around and warping/rotating it. Here's my thinking, and I welcome your input:

- A case-by-case approach is not the way to go. I have to somehow set it up so that, if net A is trying to recognize shape B (set of inputs C in the proper arrangement) in a picture D, then wherever the shape is on D (if set of inputs C comes in from any set of pixels that arranges them into the shape B), it runs through A in the same way, with the same output, as if it came in from any other set of pixels. This is not an easy thing to do. In fact, other than treating every input identically, I can't see a way to do it. Well, maybe I can, if I double the memory required by also inputting coordinates along with each pixel. That's really the only way I can see to make it work, but it's still problematic. Black Carrot 20:29, 23 January 2006 (UTC)
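"Treating every input identically" is essentially weight sharing, the idea behind convolutional neural networks. One small detector with a single set of weights is applied at every position, and taking the max over positions gives the same answer wherever the shape sits, without feeding in coordinates. Here is a minimal sketch; the class name, kernel and bias are illustrative, and rotation and warping are not handled.

```java
/** Hypothetical shared-weight detector slid over every position of a greyscale image. */
final class SlidingDetector {
    /** Applies one k-by-k weight pattern at every position and returns the strongest response. */
    static double maxResponse(double[][] image, double[][] kernel, double bias) {
        int kh = kernel.length, kw = kernel[0].length;
        double best = Double.NEGATIVE_INFINITY;
        for (int y = 0; y + kh <= image.length; y++) {
            for (int x = 0; x + kw <= image[0].length; x++) {
                double z = bias; // same weights and bias at every position
                for (int i = 0; i < kh; i++)
                    for (int j = 0; j < kw; j++)
                        z += kernel[i][j] * image[y + i][x + j];
                best = Math.max(best, z);
            }
        }
        // sigmoid is monotone, so max-then-sigmoid equals sigmoid-then-max
        return 1 / (1 + Math.exp(-best));
    }
}
```

A diagonal pair of bright pixels gives the same response at (1,1)-(2,2) as at (3,3)-(4,4). The net only has to learn the kernel once, not once per location.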

- Today, I had what may be a brilliant idea. Here's about how it works: the algorithm crawls through the picture from one pixel to another, in such a way as to keep things that are close to each other related in the processing, without getting the coordinates involved.

- It starts at the upper left, and moves at each step from wherever it is to the pixels directly to the right and directly down. Now, one way of doing that would look like a tree, and would have up to 2^150 branches in a 150x150 picture, which is unreasonable. The other way is more like a net - each time two branches meet at a pixel, they merge, and any information they gained up or to the left is carried down and to the right without wasting memory. The idea is that, as soon as any of them hit something important (say, a dark pixel), they'll start a process that will either continue with the rest of the pixels, or get broken off when the pattern fails to match. Th
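The merged version of that crawl is dynamic programming over the grid. Each pixel's state is computed once from the states above and to the left, so a 150x150 picture needs 22,500 updates. The tree version would instead enumerate every right/down path, and a 150x150 grid has C(298, 149) of those, roughly 2^294. In this sketch the class name and the merge rule (carry the darkest pixel seen so far) are hypothetical placeholders for whatever pattern-tracking state you end up using.

```java
import java.math.BigInteger;

/** Hypothetical top-left to bottom-right sweep, contrasting path counting with merged state. */
final class GridSweep {
    /** Number of right/down paths from the top-left to the bottom-right pixel (the "tree" version). */
    static BigInteger pathCount(int rows, int cols) {
        BigInteger[][] n = new BigInteger[rows][cols];
        for (int y = 0; y < rows; y++)
            for (int x = 0; x < cols; x++)
                n[y][x] = (y == 0 || x == 0) ? BigInteger.ONE : n[y - 1][x].add(n[y][x - 1]);
        return n[rows - 1][cols - 1];
    }

    /** The merged "net" version: each pixel combines the states arriving from above and from the left. */
    static double[][] sweep(double[][] darkness) {
        int rows = darkness.length, cols = darkness[0].length;
        double[][] state = new double[rows][cols];
        for (int y = 0; y < rows; y++)
            for (int x = 0; x < cols; x++) {
                double fromAbove = y > 0 ? state[y - 1][x] : 0;
                double fromLeft = x > 0 ? state[y][x - 1] : 0;
                // placeholder merge rule: remember the darkest pixel seen on any path to here
                state[y][x] = Math.max(darkness[y][x], Math.max(fromAbove, fromLeft));
            }
        return state;
    }
}
```

The sweep only carries information down and to the right, so a pixel never sees anything below or to the right of it.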

- I'm wondering...have you thought about why you want to try a neural net approach as opposed to something else? What you have is an interesting idea. You need to think about the traversal through the pixels, though, because if you did this, you would be losing some of the spatial information that is present in the data. It actually reminds me a lot of JPEG... --HappyCamper 12:35, 30 January 2006 (UTC)

- Well, one reason to stay with neural nets is that that's what I said I'd do my project on. Not that my teacher would much mind me changing it. The reason I started it that way was twofold: I thought that was the best way to solve the problems, and neural nets are cool, so I would have wanted to work with them sooner or later anyway. It could be there's a better way. What do you mean about losing spatial information? Black Carrot 20:11, 2 February 2006 (UTC)
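Two ideas from this thread can be made concrete in a few lines. The first addresses the problem raised at 20:29, 23 January: weight sharing, as used in convolutional neural networks, applies one small set of weights at every position of the image, so a shape produces the same response wherever it appears, without feeding in coordinates. The second is the "net" version of the right-and-down crawl: each pixel merges the state passed to it from its upper and left neighbours, so the work grows with the number of pixels rather than with the number of paths. This is a minimal pure-Python sketch on binary images (1 = dark pixel); the function names, the 2x2 template, and the "longest dark chain" state are hypothetical illustrations, not the method proposed above.

```python
# Toy sketches on a binary image stored as a list of rows (1 = dark pixel).
# All names here are hypothetical illustrations.

def shared_weight_scan(image, template):
    """Slide one small template over every position (weight sharing).

    The same weights are used at each offset (a cross-correlation followed
    by a max over positions), so a shape scores the same wherever it sits.
    Returns (best_score, top, left) for the first best position found.
    """
    th, tw = len(template), len(template[0])
    best = None
    for top in range(len(image) - th + 1):
        for left in range(len(image[0]) - tw + 1):
            score = sum(template[i][j] * image[top + i][left + j]
                        for i in range(th) for j in range(tw))
            if best is None or score > best[0]:
                best = (score, top, left)
    return best


def count_monotone_paths(rows, cols):
    """Count distinct right/down paths from the top-left to the bottom-right pixel.

    This is the number of branches in the 'tree' version of the crawl;
    merging counts at each pixel computes it in rows * cols steps.
    """
    paths = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            if r == 0 and c == 0:
                paths[r][c] = 1
            else:
                up = paths[r - 1][c] if r > 0 else 0
                left = paths[r][c - 1] if c > 0 else 0
                paths[r][c] = up + left
    return paths[rows - 1][cols - 1]


def longest_dark_chain(image):
    """'Net' crawl: each pixel merges what its upper and left neighbours found.

    best[r][c] is the length of the longest right/down chain of dark pixels
    ending at (r, c); a light pixel breaks the chain (stays 0).
    """
    rows, cols = len(image), len(image[0])
    best = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            if image[r][c]:
                up = best[r - 1][c] if r > 0 else 0
                left = best[r][c - 1] if c > 0 else 0
                best[r][c] = 1 + max(up, left)
    return max(max(row) for row in best)


# Usage: a 2x2 dark square in a 6x6 image is found by a 2x2 all-ones template.
square = [[0] * 6 for _ in range(6)]
for r, c in [(1, 1), (1, 2), (2, 1), (2, 2)]:
    square[r][c] = 1
print(shared_weight_scan(square, [[1, 1], [1, 1]]))  # (4, 1, 1)
print(count_monotone_paths(3, 3))                    # 6
print(longest_dark_chain([[1, 0, 0],
                          [1, 1, 0],
                          [0, 1, 1]]))               # 5
```

Moving the square elsewhere changes only the reported position, not the score, which is the position-independence asked for. For a 150x150 picture, count_monotone_paths(150, 150) returns the binomial coefficient C(298, 149), a number with 89 digits, which is why merging at each pixel rather than branching matters.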

Hey again. In case you haven't already done so, you should keep up with the goings-on here, as there will be a strong interplay between the new Main Page design and ASK. hydnjo talk 03:29, 21 January 2006 (UTC)

Archiving

For example, this page, your own talk page, contains entries over a one-month period and is thus reasonably easy to navigate, review and keep in your memory. However, as time and discussions progress, your talk page may become so lengthy that you might want to chop off a chunk of stale conversation. Since it's your own talk page, you could elect to just delete the "old" stuff and shorten the page that way. Most folks, however, elect to save those past discussions in their own archive; why not, most of us reason, since who knows what I may want to reference a year from now. In any event, even if you deleted it, it would still be available from your talk page history (there's NO erasing that), so archiving just makes things more convenient. Please don't hesitate to come to my talk page for anything you think I could help with. We really do try to be helpful. :-) hydnjo talk 02:21, 25 January 2006 (UTC)

Main Page Drafts

I've added links on each draft (for example, Draft 6A) to all of the other drafts; it's really the only way to make comparisons. If you think this is stupid, then let me know; otherwise I'll keep it up. hydnjo talk 03:42, 25 January 2006 (UTC)

New Main Page Election talks

A discussion has begun on how to handle an official election for replacing the Main Page. To ensure it is set up sensibly and according to consensus, your input is needed there. --Go for it! 22:48, 26 January 2006 (UTC)

Talk:Mentat

Why are you backslashing single and double quote marks all over the Talk:Mentat page? Justin Johnson 16:56, 22 March 2006 (UTC)

- LOL... thank you for unbackslashing my talk page. Justin Johnson 14:39, 23 March 2006 (UTC)

Misconfigured proxy

(moved from User:Black Carrot 01:30, 15 June 2006 (UTC))

You appear to be using some sort of mis-configured webproxy. Is there any way that you can correct this, please? Best regards, Hall Monitor 18:47, 28 March 2006 (UTC)

Hi. I'm sorry if you were offended by my cleanup action with regard to your post. The prime reason it was removed was that it offered no valid answer to the question that was posted. The q and a page is not designed for people to offer mere opinions, but rather to offer direct answers to questions. Again I am sorry if you were offended.

Peace. Raven.x16 12:43, 14 June 2006 (UTC)

- Again I apologize. Perhaps I was misguided in assuming that the q and a page was designed with a formal approach in mind - with questions and answers not anything else. You have every right to your opinion, and for the record, yes, there are a number of questionable "experiments" in many, if not all fields of science. Now that I am aware of protocol, I will try to follow it.

- ~Peace. Raven.x16 07:16, 15 June 2006 (UTC)

- P.S. Sorry about putting it in the wrong place.

Edit Summaries

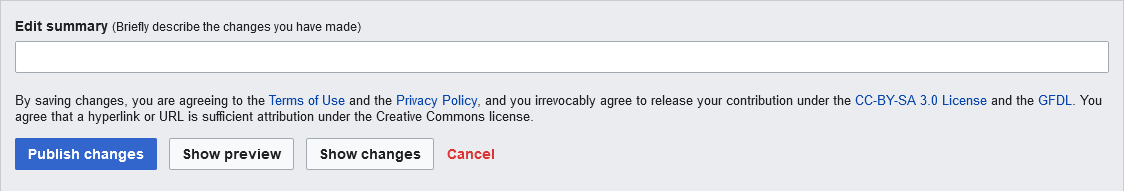

[edit]When editing an article on Wikipedia there is a small field labeled "Edit summary" under the main edit-box. It looks like this:

The text written here will appear on the Recent changes page, in the page revision history, on the diff page, and in the watchlists of users who are watching that article. See m:Help:Edit summary for full information on this feature.

Filling in the edit summary field greatly helps your fellow contributors in understanding what you changed, so please always fill in the edit summary field, especially for big edits or when you are making subtle but important changes, like changing dates or numbers. Thank you. —Mets501 (talk) 23:51, 15 June 2006 (UTC)

- You failed to provide edit summaries for basically all of your edits. Here are your most recent contributions. I see one edit summary. You do realize that just having the section title is not an edit summary, right? —Mets501 (talk) 23:52, 15 June 2006 (UTC)

- Listen, please just calm down. No offense was intended by simply alerting you about edit summaries. I was just informing you about Wikipedia policies. You chose to lash out at me for no apparent reason, and therefore I had to defend myself by posting the edits below. I am not here to torture you, just here to try and improve Wikipedia, and if I happen to see someone who doesn't use edit summaries often I will let them know with the edit summary notice. I'm sorry if you felt offended by my edit summary notice. —Mets501 (talk) 19:58, 16 June 2006 (UTC)

- Yes, let's move on. Consider this my last response :-). If you're curious, that edit summary notice was not written by me, but is the {{summaries}} template, and I didn't mean it if it sounded like I was calling you a "newbie". —Mets501 (talk) 02:38, 17 June 2006 (UTC)

- 19:43, June 15, 2006 (hist) (diff) Wikipedia:Reference desk/Science (→Jogging at the speed of sound)

- 19:42, June 15, 2006 (hist) (diff) Wikipedia:Reference desk/Science (→The end of the world...)

- 19:38, June 15, 2006 (hist) (diff) Wikipedia:Reference desk/Mathematics (→Word Puzzle) (top)

- 19:34, June 15, 2006 (hist) (diff) Wikipedia:Reference desk/Mathematics (→June 15)

- 16:36, June 15, 2006 (hist) (diff) Wikipedia:Reference desk/Science (→Java Image Editing)

- 12:51, June 15, 2006 (hist) (diff) Wikipedia:Reference desk/Science (→Java Image Editing)

- 11:58, June 15, 2006 (hist) (diff) Wikipedia:Reference desk/Science (→IQ)

- 11:41, June 15, 2006 (hist) (diff) Axolotl (Repeat Reversion) (top)

- 22:18, June 14, 2006 (hist) (diff) Wikipedia:Reference desk/Science (→New body)

- 22:15, June 14, 2006 (hist) (diff) Wikipedia:Reference desk/Science (→Self-aware)

- 22:00, June 14, 2006 (hist) (diff) Wikipedia:Reference desk/Science (→June 15)

- 21:31, June 14, 2006 (hist) (diff) User talk:Black Carrot (→Misconfigured proxy)

- 21:30, June 14, 2006 (hist) (diff) User talk:Black Carrot (→Misconfigured proxy)

- 21:30, June 14, 2006 (hist) (diff) User talk:Black Carrot

- 21:29, June 14, 2006 (hist) (diff) User talk:Raven.x16 (→Rewriting Posts) (top)

- 21:29, June 14, 2006 (hist) (diff) User talk:Raven.x16 (→Rewriting Posts)

- 18:32, June 14, 2006 (hist) (diff) Wikipedia:Reference desk/Science (→ant .5 mm)

- 12:55, June 14, 2006 (hist) (diff) Wikipedia:Reference desk/Science (→The FDA has been tapping my phone calls)

- 20:58, June 13, 2006 (hist) (diff) Wikipedia:Reference desk/Mathematics (→Nifty Prime Finder Thingy)

- 18:48, June 13, 2006 (hist) (diff) Wikipedia:Reference desk/Mathematics (→June 13)

- 18:37, June 13, 2006 (hist) (diff) Wikipedia:Reference desk/Science (→Genetics)

- 18:32, June 13, 2006 (hist) (diff) Wikipedia:Reference desk/Science (→Frontbend)

- 16:55, June 13, 2006 (hist) (diff) Wikipedia:Reference desk/Language (→Rape Rooms)

- 16:52, June 13, 2006 (hist) (diff) Wikipedia:Reference desk/Language (→Another odd writing behaviour)

- 16:48, June 13, 2006 (hist) (diff) Wikipedia:Reference desk/Miscellaneous (→Measurements)

- 14:48, June 13, 2006 (hist) (diff) Wikipedia:Reference desk/Science (→Odd Trait)

- 13:45, June 13, 2006 (hist) (diff) Wikipedia:Reference desk/Science (→Odd Trait)

- 13:38, June 13, 2006 (hist) (diff) Wikipedia:Reference desk/Science (→Frontbend)

- 23:25, June 12, 2006 (hist) (diff) Wikipedia:Reference desk/Language (→June 13)

- 23:21, June 12, 2006 (hist) (diff) Wikipedia:Reference desk/Language (→June 13)

- 22:25, June 12, 2006 (hist) (diff) Wikipedia:Reference desk/Science (→June 13)

- 22:16, June 12, 2006 (hist) (diff) Wikipedia:Reference desk/Science (→Popular Mechanics)

- 22:04, June 12, 2006 (hist) (diff) Wikipedia:Reference desk/Science (→Odd Trait)

- 22:03, June 12, 2006 (hist) (diff) Wikipedia:Reference desk/Science (→Odd Trait)

- 18:20, June 12, 2006 (hist) (diff) Wikipedia:Reference desk/Science (→Genetics)

- 18:08, June 12, 2006 (hist) (diff) Wikipedia:Reference desk/Mathematics (→These moths have been bugging me)

- 18:08, June 12, 2006 (hist) (diff) Wikipedia:Reference desk/Mathematics (→These moths have been bugging me)

Sequences of composites

Hi - Thanks for the post at Wikipedia:Reference desk/Mathematics re my number theory question. I really am interested in continuing this conversation if you're up for it. Email works for me. I suppose we could converse on talk pages if you'd prefer. Please let me know what you think. Thanks. -- Rick Block (talk) 23:49, 14 July 2006 (UTC)

- I hadn't run the numbers, but doing so I agree the limit based on the formula for the absolute number of prime candidates seems to grow faster than Π(p(i))/p(i). I'm not sure where you're getting the formulas for the number of twin prime candidates (or those that differ by 4). And I'm not sure if you mean Product( p(i) - 2) or primorial(p(n)-2) (too many pi's floating around!).

- You're working on fixing your email? BTW - what do you do? I'm a computer scientist, not a mathematician. -- Rick Block (talk) 23:26, 15 July 2006 (UTC)

- Yes. I got your emails (thanks). It may be a day or two before I reply (other stuff on my mind at the moment). -- Rick Block (talk) 02:58, 24 July 2006 (UTC)
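One reading of the twin-prime "candidate" formulas discussed above can be checked directly. Assuming (this is an assumption, not something stated in the thread) that a candidate is a residue r modulo the primorial P = p(1)·p(2)·…·p(n) that survives sieving by the first n primes, the Chinese remainder theorem gives the counts: a prime p that divides the gap leaves p - 1 allowed residues, and every other prime leaves p - 2. So single candidates number Π(p(i) - 1), while pairs differing by 2 and pairs differing by 4 both number Π(p(i) - 2) over the odd primes (the prime 2 contributes a factor of 1). The sketch below compares that product with a brute-force count; the function names are hypothetical.

```python
from math import gcd, prod


def surviving_pairs_brute(primes, gap):
    """Count residues r mod P (P = product of primes) with r and r + gap both coprime to P."""
    P = prod(primes)
    return sum(1 for r in range(P) if gcd(r, P) == 1 and gcd(r + gap, P) == 1)


def surviving_pairs_formula(primes, gap):
    """CRT count: each prime removes 1 residue if it divides gap, otherwise 2.

    gap = 0 gives the single-candidate count, Euler's phi(P) = prod(p - 1).
    """
    return prod(p - 1 if gap % p == 0 else p - 2 for p in primes)


# Usage with P = 2*3*5*7 = 210:
print(surviving_pairs_formula([2, 3, 5, 7], 0))  # 48 single candidates
print(surviving_pairs_formula([2, 3, 5, 7], 2))  # 15 twin candidates
print(surviving_pairs_formula([2, 3, 5, 7], 4))  # 15 pairs differing by 4
```

The brute-force version is only practical for small primorials, but it is a useful sanity check on any closed-form count before reasoning about limits.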

Bumpiness

No, sorry, I haven't had time to think about it much. I understand what you're saying, though. I'll give it some thought today, and maybe I can ask some other people about it. —Bkell (talk) 18:16, 3 August 2006 (UTC)

Number One

Hi. I wasn't sure if you saw my replies to your Real Genius Song question, so I thought I'd drop you a quick note here. --LarryMac 16:04, 22 September 2006 (UTC)

- I don't want to break any rules about copyright; I know that in general we can't put lyrics on WP pages, so I'm being cautious. I've put them up on my website for now: http://www.larrymac.org/NumberOneLyrics.txt

- When you ask how can you get the other stuff, do you mean the other songs? I think I got most of them back when Napster was "illegal." Yes, I have inconsistent views on copyright. --LarryMac 16:53, 23 September 2006 (UTC)

- Here is the link to the video on YouTube - http://www.youtube.com/watch?v=82MXT1owuLo

- The link you had provided in your original question listed the albums containing most of the songs. Some/most are probably out of print. If you have no qualms about using P2P file sharing services, I'm sure you can get most of them. --LarryMac 13:02, 25 September 2006 (UTC)

Belated response

It took me a while to notice that you had asked more at Wikipedia:Reference_desk/Archives/Science/2006_November_7#Evolution. I have since responded. Cheers, Scientizzle 21:48, 10 November 2006 (UTC)

- It took a while, but I've attempted an in-depth response to your question on my talk page. -- Scientizzle 20:03, 28 November 2006 (UTC)

Cyclops

Hi there Black Carrot. Happy new year! Have you recently lost your sight? My email is in my profile if you'd prefer to talk off-wiki. Cheers Natgoo 13:26, 31 December 2006 (UTC)

- About a year and a half ago, in an accident that can only be described as unlikely. My right eye, in fact, which wound up reversing an 'irreversible' tendency towards myopia in my left, because it had no choice but to get in shape. Cursed money-grubbing spectacle-sellers. I'm trying to find information, assuming it exists, on scholarship opportunities for the hemiblind. Also, do you have depth perception? Black Carrot 16:09, 31 December 2006 (UTC)

- Ah - I don't know where you're from, but try searching for 'partially sighted' - this term is in use more than 'monocular blindness'. I have been eligible for certain things (tax rebates, subsidies, scholarships, free bus passes) but eligibility rules have generally changed against my favour as I've got older (I find now that much UK govt assistance relies on modifications to the home, for example, which I don't have or need). I have okay depth perception (I played netball and softball as a kid, and drive), but I lost my eye when I was very young (from retinoblastoma) and I think my brain has learned to compensate. I use the expected lack of depth perception when convenient, however, such as when playing pool :). Has this been a problem for you so far? Natgoo 21:36, 31 December 2006 (UTC)

- No, it seems not to be a great problem. The human brain is an amazing and complex thing, but the article on depth perception is pretty forthright in contending that it is impossible for us to have true depth perception. What I have is fine, and functional. Has your depth perception changed at all since the accident? You won't be able to see 3D movies, or those Magic Eye books, though. That is gone for good, I'm afraid! Have you had any luck finding scholarships or bursaries yet? Natgoo 21:37, 4 January 2007 (UTC)

Image copyright problem with Image:N38602286_30224669_2261.jpg

Thanks for uploading Image:N38602286_30224669_2261.jpg. The image has been identified as not specifying the copyright status of the image, which is required by Wikipedia's policy on images. If you don't indicate the copyright status of the image on the image's description page, using an appropriate copyright tag, it may be deleted some time in the next seven days. If you have uploaded other images, please verify that you have provided copyright information for them as well.

For more information on using images, see the following pages:

This is an automated notice by OrphanBot. For assistance on the image use policy, see Wikipedia:Media copyright questions. 08:07, 25 May 2007 (UTC)

Orphaned Image

I was sifting through unused images and found your frog: Image:Chameleon Frog.jpg. If you want to keep it, may I suggest using it either in an article or your user page? Otherwise it may get deleted. cheers. —Gaff ταλκ 19:29, 14 June 2007 (UTC)

Animals and pain

Here is Nimur's note on your post, and my reply. FYI.

One of the assumptions of behaviorist school of psychological thought is that the behaviors are inherently dispassionate. The notion of isolating a specific stimulus and a specific response is hardly a complete theory of psychology, for animal subjects or for humans. Our psychology article has a good section on the rise of behaviorist thought and some of the later ideas that it spawned. Maybe this will give you some context - there are definitely realms where the simplistic experimental view of single-stimulus, single-behavior, single-response mappings do not really hold well, and you have described exactly such a case. Nimur (talk) 16:17, 14 May 2008 (UTC)

What does this sort of psychobabble mean, Nimur? If you are saying that animals feel no pain, then why bother with the animal cruelty laws? And as post-Darwin we all know that humans are animals as well, why should we get upset concerning human suffering? If someone forced your hand into a pot of boiling water and observed your sweating and screaming and face-pulling, would you approve of his dismissal of your extreme, albeit subjective, pain as an unscientific phenomenon? I would have thought this sort of uber-“scientific” rubbish had been thoroughly refuted by now. Here is a self-explanatory par I added to the talk page of Cetacean intelligence. As the page is transcluded now, I am posting both Nimur and OP Black Carrot this note on their talk pages.

With ref to Michele Bini’s comment above, dolphins are NOT the only animals that engage in self-destructive behaviour when panic-stricken or in extreme pain. All the ‘higher mammals’ including horses, cats, dogs and apes can present with human-like symptoms of severe stress. Dogs which lose a much-loved master can show every sign of ‘nervous breakdown’ and clinical depression, both behaviorally and physically. Other mammals will go on rampages, chew their own fur and eat their own excrement, refuse food and howl incessantly. Apes will throw themselves against their cages. In a series of notorious but well-conducted experiments of the 1960s, researchers tormented dogs to the point where they not only had ‘breakdowns’ but showed every sign of having become permanently insane through terror and pain. These were ‘higher’ animals. I have no idea whether you can make a butterfly mad, or drive a snail to distraction. Myles325a (talk) 04:36, 20 February 2008 (UTC) Retrieved from "http://en.wikipedia.org/wiki/Talk:Cetacean_intelligence"

Hi Nimur, thanks for your note re “what you really meant”. Find below my response, which I am also posting to your Talk Page, and to OP Black Carrot.

There are many things in life that make me want to get down on the ground and pull up carpet tacks with my teeth, and one of the main ones is people who write or say something that reads as muddled or dead wrong and then, after they are taken to task for it, aver that the critic has misunderstood what was intended. The ensuing “debate” takes up everyone’s time, and WP talk pages, and archives, and bandwidth, and patience, and produces much more heat than light.

Your contributions, Nimur, are a copybook example of just such a skew-whiff dialogue. As WP is full of people waving hands and employing phrases that could mean any number of things, I will try to show you why writing clear English and saying EXACTLY what you mean in as concise and unambiguous a way as you can will render you far less misunderstood than you are at the moment. Before I continue might I ask you and others to re-read this and the preceding paragraph? You might not AGREE with the content, but is there anything at all there which you find less than crystal clear? Good. Then let's pass on to your recent offerings. I have put your words in bold type and my own comments in plain.

One of the assumptions of behaviourist school of psychological thought is that the behaviours are inherently dispassionate. Now what significance does dispassionate take on here? Let's not beat around the bush. Does this mean that animals don't feel pain, or that behaviourists don't believe they do, or don't care if they do, or don't believe that pain exists, or don't believe that animals feel pain the way humans do, or consider the subject of subjective feelings of pain as a metaphysical hobbyhorse external to scientific research?

And why would you opt for the murky term "dispassionate" rather than write "behaviours are inherently without emotion"? Dispassionate muddies the waters in this context because it can ALSO mean impartial, disinterested and the like. Are you using it as a "weasel word" because while it connotes "without emotion" it also tempers it with a soupçon of "impartial"? Are the animal behaviours themselves "dispassionate" or are the scientists "dispassionate", or is it the methodology itself?

And what is the force of "inherently" here? How is "behaviours are inherently dispassionate" different from the shorter "behaviours are dispassionate". The addition of inherently would suggest that while some external observers might perceive certain animal behaviour as "passionate", objectively, that is "inherently", they are not. If this is not the meaning you intended, perhaps you could explain what you DID intend.

Now let us look at there are definitely realms where the simplistic experimental view of single-stimulus, single-behaviour, single-response mappings do not really hold well… Frankly, Nimur, there are NO interesting cases of animal, or human behaviour, which conform to the single-stimulus, single-response case. I spent a year of a University Psych course under a fanatical behaviourist learning about mice pushing levers for food. In the end, I ascertained that the good Professor preferred to record the highly circumscribed behaviour of mice because, as a scientist, he could not neatly explain what it is that HUMANS were doing, and as a border-line autistic, he was only dimly aware of the world of human experience, and cared even less for it.

A mouse pushes a lever and gets a food pellet. Great! Now, Cindy likes going out and often says "Gee, swell! When do you want to pick me up?" when she gets an invite, uh, sorry, the stimulus of a speech segment over a phone to that effect. But today, Cindy got just such a stimulus and replied to the effect that she was doing her hair. Now, we airy-fairy metaphysical types might just say that's because Cindy did not think that the boy who phoned was a "real spunk rat" or a "hunk". But, and I quote my erstwhile teacher on this, scientifically it should be said that there were "intervening variables" between the stimulus and the response, which made Cindy behave differently. What an absolute laff riot!! Everything—but everything—interesting in this episode lies in these "intervening variables". And as for Cindy, so for all humans, and the great bulk of life. It is another example of weasel words for you to say that the single stimulus – single response model does not (in some cases) hold really well. Apart from jumping up when you sit on a tack and the like, there is NOTHING in life which can be described by such a mechanism, and it is an absolute indictment of the entire psychological "profession" that it was not laughed out of business when it was first proposed. So, do tell us, Nimur, what does "does not hold really well" mean?

Nimur, if you cannot quantify the stimulus – response model in life, can you at least quantify how often and in what circumstances human responses DO conform to such models? After all, if we are unable to record and quantify human responses, then we should be able to at least quantify the success to failure ratio of its predictions. My own estimate is that, after all those experiments, the success rate is close to zero. Millions of mice and pigeons pecked and pushed at levers and ran down mazes, and in the wash-up, decades later, I think it was B.F. Skinner himself who gave a description of how behaviourist theory can have some practical significance. Some college was having problems with student stragglers coming late for lunch and thus keeping kitchen staff waiting. Skinner suggested ringing a bell that would summon the students and then denying lunch to those who came more than 30 minutes later! Yes, we are indebted to Skinner and the behaviourists for this and many other such breakthroughs.

You end your piece on another weasel note. After noting that you did not say that animals did not feel pain, you finish with I don't see in any way how this has anything to do with the capacity for the animal to feel pain, nor the ethics of animal testing. While OP Black Carrot's original post does not directly deal with animal cruelty, it broaches the subject of highly stressful / painful experiments in which an animal is rewarded and punished randomly for the same behaviour, and links it directly with existential pain felt by humans who might be exposed to such treatment in a social setting. It is hard to credit that you really have no idea how any of that can impinge on the broader topic of animal cruelty.

Moreover, I do not see why you simply do not declare yourself and say what it is that you DO believe in this regard. Why duck the issue of animal (or indeed human) pain by sweeping it under the carpet of "complex behaviours"? And the question of animal pain is not a red herring. Descartes publicly propounded the theory that animals were no more than machines—a prototypically behaviourist notion—and that the noises they made when they were killed were no different in type to those of a creaking wheel. France, which still venerates Descartes, has—for a European nation—a backward attitude toward animal suffering precisely for this reason.

But to get back to the main thread. Nimur, if you said exactly what you INTENDED clearly and without ambiguous weasel-words, then you would find yourself misunderstood on fewer occasions. As it is, if I have misunderstood you, then the preceding will give you fair indication why. Myles325a (talk) 05:02, 17 May 2008 (UTC)

Probability

Carrot, Did you ever get an adequate handle on random sequence as described at User_talk:Fuzzyeric#Probability? -- Fuzzyeric (talk) 22:59, 17 May 2008 (UTC)

File:Case 5 Diagram 2.jpg listed for deletion

An image or media file that you uploaded or altered, File:Case 5 Diagram 2.jpg, has been listed at Wikipedia:Files for deletion. Please see the discussion to see why this is (you may have to search for the title of the image to find its entry), if you are interested in it not being deleted. Thank you. Skier Dude (talk) 05:03, 9 February 2009 (UTC)

File:Case 5 Diagram 3.jpg listed for deletion

An image or media file that you uploaded or altered, File:Case 5 Diagram 3.jpg, has been listed at Wikipedia:Files for deletion. Please see the discussion to see why this is (you may have to search for the title of the image to find its entry), if you are interested in it not being deleted. Thank you. Skier Dude (talk) 05:03, 9 February 2009 (UTC)

Found the paper

Try Beklemishev, L. D. (2003). "Proof-theoretic analysis by iterated reflection". Arch. Math. Logic. 42: 515–552. doi:10.1007/s00153-002-0158-7. --Trovatore (talk) 01:23, 12 June 2011 (UTC)

File:SinfestCalligraphy03042007.jpg listed for deletion

A file that you uploaded or altered, File:SinfestCalligraphy03042007.jpg, has been listed at Wikipedia:Files for deletion. Please see the discussion to see why this is (you may have to search for the title of the image to find its entry), if you are interested in it not being deleted. Thank you. Sfan00 IMG (talk) 19:36, 13 July 2013 (UTC)

Notification of automated file description generation

Your upload of File:Case 5 Diagram 4.jpg or contribution to its description is noted, and thanks (even if belatedly) for your contribution. In order to help make better use of the media, an attempt has been made by an automated process to identify and add certain information to the media's description page.

This notification is placed on your talk page because a bot has identified you either as the uploader of the file, or as a contributor to its metadata. It would be appreciated if you could carefully review the information the bot added. To opt out of these notifications, please follow the instructions here. Thanks! Message delivered by Theo's Little Bot (opt-out) 15:03, 14 March 2014 (UTC)

- Another one of your uploads, File:Case 5 Diagram.jpg, has also had some information automatically added. If you get a moment, please review the bot's contributions there as well. Thanks! Message delivered by Theo's Little Bot (opt-out) 15:08, 15 March 2014 (UTC)